Going nomad

I got rid of all my stuff and live off only a suitcase - staying in Airbnbs to explore different areas 🏡🚀 excited to live nomad life pic.twitter.com/nWGf7AFKiZ

— Felix Krause (@KrauseFx) November 9, 2017

Update: Check out the One Year Nomad post from 2018

Background

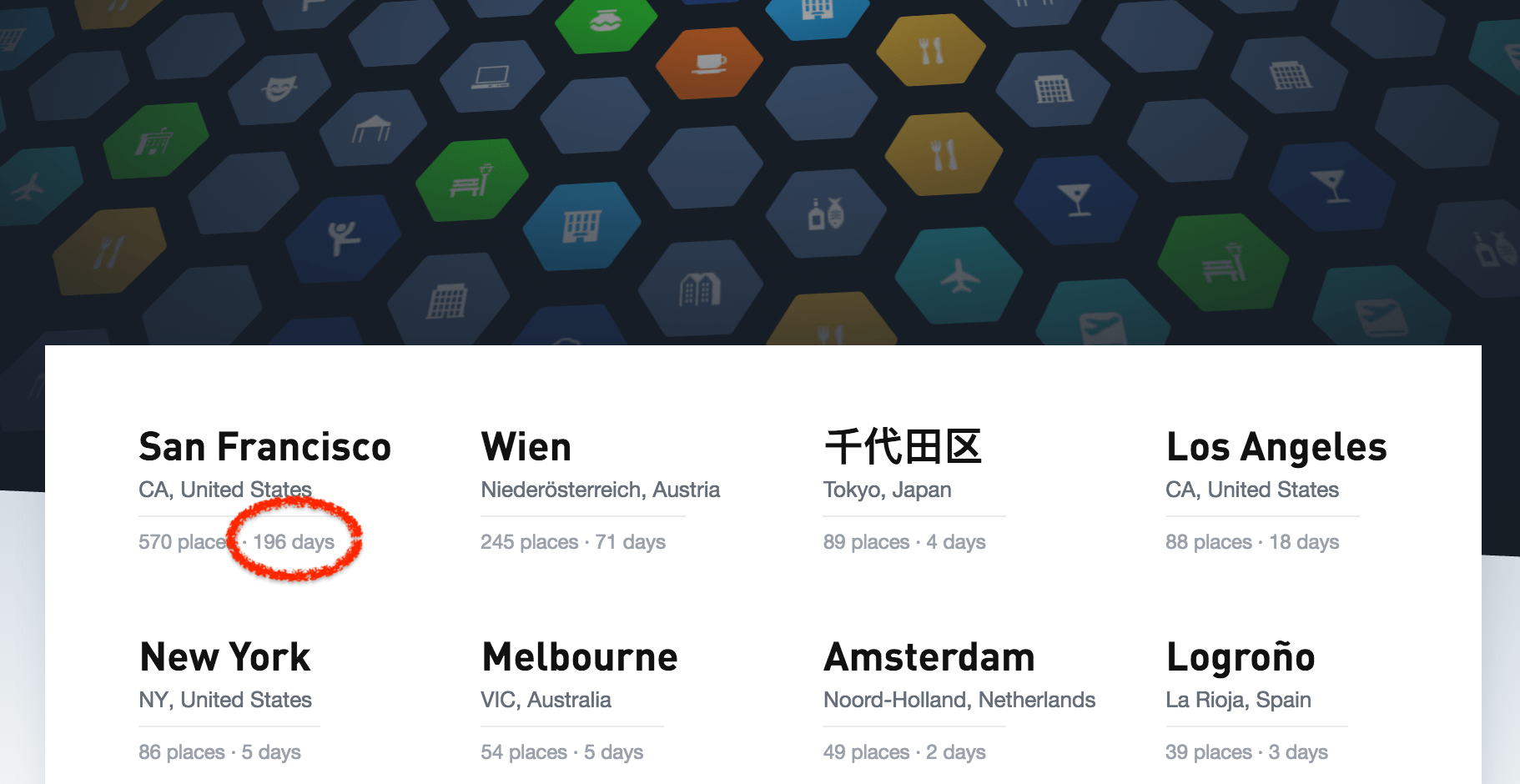

I moved to San Francisco in the summer of 2015 to join Twitter. I lived in a furnished apartment for my first year, which I really enjoyed, as I didn’t have to buy all the essentials myself after moving across the globe to a new country.

After my 1-year lease, I decided to do what “grown-ups” are supposed to do: get your own apartment, buy furniture, decorate the place, and make it your home. After living in my little studio for about 1.5 years, I noticed a few things:

- In 2017 I only spent about 200 nights in my apartment, so I still paid about 5 months’ worth of San Francisco rent without actually living there (the average monthly rent for a studio apartment is about $3,000 + utilities, so about $15,000 of my after-tax money was lost)

- While I enjoy having my own space, I never invested enough time and effort into making it nice: Until the day I moved out after 18 months, I still didn’t have enough closets for all my things and I had my clothes piled up in some corner

- I didn’t like being bound to one location in the city. In particular, in the common case of your employer getting acquired by another company (#justSFthings), your commute changes, and you can’t just move.

- I didn’t like the fact that I was always surrounded by the same places and things became routine. Same subway station, same spots I walk by every day, same views, same commute, etc. After a month it gets boring and I need a change.

The idea

Ever since I started reading @levelsio’s blog in 2014 about living out of just a backpack and traveling across the world while working on his own startups, I’ve been fascinated by the idea. However, I always assumed it wouldn’t work if you have a full-time job at a large company like Twitter or Google.

2014 was also the year I met @orta, who told me about his first year in New York City, where he lived in a random Airbnb in a different neighborhood each month. This allowed him to see what NYC has to offer, and which area he liked the most. I loved the idea, and kind of knew I wanted to do this at some point in my life.

Only in October 2017 did I realize that combining those two ideas might actually work.

Making the move

After living in San Francisco for 2.5 years, I wanted a change. With my lease ending in October, I decided to reduce my life to just

- 1 suitcase

- 1 carry-on luggage

- 1 backpack

and lived in an Airbnb in San Francisco until the winter holidays, when I went back home to Austria. I got really lucky with my SF Airbnb, as I got it from Zeus Living, a company that rents out apartments for people like me: rent a place per month, all utilities included, with enough space and a desk to get work done.

For the last 6 months I’ve lived the nomad life, with just the things listed above. So far I’ve stayed in 6 different neighborhoods in NYC and 2 areas in SF, and spent New Year’s with my family in Austria. While I plan flights ahead of time due to costs, I don’t book places more than a month in advance, something that took some getting used to.

Spending time in a single city

While being in a different city each month might sound like a dream to many people, I learned it comes with many downsides:

- It’s hard to build up a social circle of close friends

- It’s hard to really get to know a city, and make use of all the things it has to offer

- It’s hard to learn more about the culture

- Cities change with the seasons: summer is usually quite different from winter

- It’s stressful changing cities too often

Last year I spoke at conferences in 9 cities. I knew I wanted to fly less in 2018.

In January 2018, we started the new fastlane.ci project, which requires us to work more closely with other Google teams that are partly based in New York. I used that opportunity to “move” to NYC. So while I move to a different Airbnb every week, I do so within the same city. I grew up in a village with a population of fewer than 2,000 and not a single traffic light. Living in New York has been an amazing experience, with almost as many people living here as in the whole country of Austria.

For now, this seems like the perfect balance for me personally: Not getting bored by day to day routine (e.g. same commute) by moving to a new Airbnb every week, but also being able to hang out with the same friends, and get to know the whole city. Long term, I’ll switch to a monthly cycle for even less overhead.

Frequently asked questions

How do you handle physical mail?

Online orders: I’m lucky that I can have Amazon orders delivered to the Google office and pick them up at the end of the work day. This is available in most major cities, and even allows me to order something to a specific location. For example, when I flew to Amsterdam, I ordered an umbrella to the office there, ready for me to pick up.

Letters: I use the VirtualPostMail service. They scan your letters and send them to you via email. If you need the original, you can tell them to forward it to your current address (or office, in my case).

Money

My first thought was that staying in Airbnbs must be more expensive than having my own place, for multiple reasons:

- Short term leases have to charge more to account for the vacant nights

- Airbnbs are furnished, and include some basic services and utilities

- Airbnb charges a pretty hefty fee for each booking

Circling back to the fact that I’m away from home for about 5 months each year, I realized that I don’t pay (SF/NYC) rent when:

- I speak at a conference, and the organizers cover the hotel costs

- I go on vacation

- I go back home

- I crash on a friend’s couch / extra bed

- Google plans a team-offsite in a different location and covers the accommodation

- I take a red-eye flight (a flight that leaves around midnight and lands in the morning)

For every night I don’t need to pay for my own place, I save about $100 of after-tax money (NYC/SF).

Do you keep any physical memories?

You can either ask your parents nicely to keep your things, or you can rent a storage unit somewhere. I decided to bring my things back home to Austria, by just taking an extra bag with me the first time I flew back.

How did you get rid of so much stuff?

I don’t care about physical things. If I were to lose all my devices, or all my clothes today, I’d buy new ones (probably the same ones). So getting rid of things was rather easy, and I personally never understood why it’s difficult, unless there are certain memories attached.

All I asked myself was: Do I really need this? If the answer wasn’t an immediate yes, it was a no. If I wanted to keep the “memory”, I made sure to take a picture before giving it away.

I created a spreadsheet of all the things I was giving away, from furniture to kitchen stuff to light bulbs, and shared it with my friends on Facebook. I got “rid” of everything, as 2 of my friends had just moved to a new place and needed almost everything. The remaining things I donated, or threw away if they weren’t usable any more.

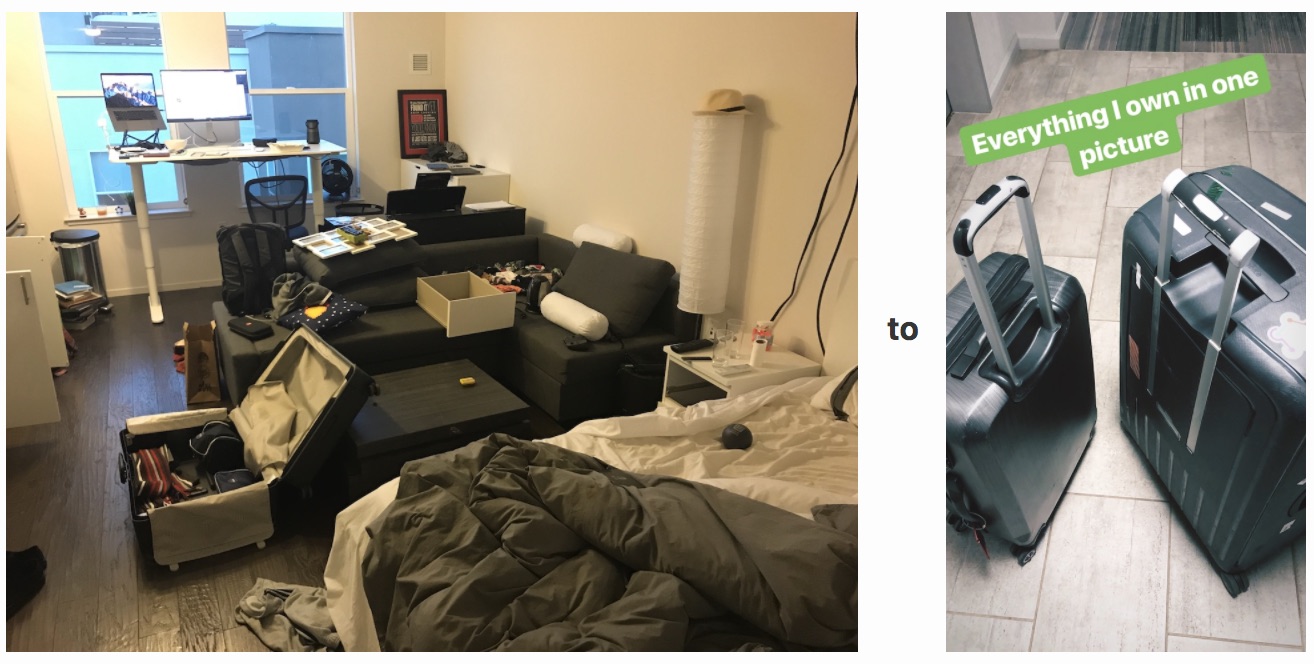

I went from

It is tricky to buy new stuff, though, since I need to get rid of something else for every single new item I buy. While my suitcases still have some space left, the 22kg weight limit of most airlines is what I have to be careful about.

How do you keep things organized?

Travel cubes have been pretty useful: I got a lot of them, for shirts, socks, underwear, electronics, etc., and can recommend them to anyone traveling.

Did you buy any travel gear for this?

Yes, there are some really cool things out there that have made my life easier:

- Portable Bose SoundLink Revolve+

- Roost Stand (a MacBook stand for ergonomic working wherever you are, combined with a Bluetooth keyboard + trackpad)

- Travel cubes to keep things organized

- Travel scale (I’m into fitness, and started tracking my weight every morning)

Is that “Minimalism Life”?

I’ve read a bit about this topic, including a great blog post by a friend of mine about owning 200 things. I love the concept of owning just the things you really need, and forgetting the rest.

I also watched the “Minimalism” documentary on Netflix, which covers some of the concepts. Personally, I don’t want to count things, or cut back in areas of life where I don’t want to. For example, I still carry around a rather high number of shoes, just because I like having the right shoes for the right occasion.

I’d argue the goal is to live a normal day-to-day life, when going to work or hanging out with friends, while still being very flexible.

Security concerns

That’s something I’ve been thinking about more recently. Breaking into an Airbnb is probably super easy: just stay in a place, copy the key, and then steal from the next guest, who will probably blame the host or the cleaning staff. Unfortunately, it’s not common for Airbnbs to have a safe.

- I generally don’t own anything of real value, besides my MacBook

- I don’t leave anything valuable in my Airbnb, but keep it at the Google office instead

- All of my documents are stored online

- Hourly backups on different continents, using hard drives and custom cloud backup solutions, all end-to-end encrypted, in a total of 5 different locations

- Even if I were to lose 100% of my things, including all my devices, I have a clear recovery path that lets me restore my complete online identity, documents and everything else in less than 24 hours. For security reasons I can’t share more about this specific topic, but I recommend that everyone draw a map of the dependencies between the services/software/hardware they use, and work out how to recover them step by step.
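A dependency map like that is essentially a directed graph, and a step-by-step recovery plan is a topological sort of it. Here's a minimal JavaScript sketch of the idea; every item and dependency in it is a hypothetical placeholder, not my actual setup.

```javascript
// Hypothetical dependency map: each item lists what must already be recovered
// before you can regain access to it. Every dependency must also be a key.
const dependsOn = {
  "passport": [],
  "phone number (SIM)": ["passport"],
  "laptop": [],
  "password manager": ["laptop", "phone number (SIM)"],
  "email": ["password manager"],
  "cloud backups": ["email", "password manager"],
};

// Kahn-style topological sort: repeatedly recover every item whose
// dependencies are all recovered. A cycle means you'd be locked out.
function recoveryOrder(graph) {
  const remaining = new Map(
    Object.entries(graph).map(([item, deps]) => [item, new Set(deps)])
  );
  const order = [];
  while (remaining.size > 0) {
    const ready = [...remaining.keys()].filter(
      (item) => remaining.get(item).size === 0
    );
    if (ready.length === 0) {
      throw new Error("Circular dependency: " + [...remaining.keys()].join(", "));
    }
    for (const item of ready) {
      order.push(item);
      remaining.delete(item);
    }
    // Everything in `ready` is now recovered, so drop it from the rest.
    for (const deps of remaining.values()) {
      for (const item of ready) deps.delete(item);
    }
  }
  return order;
}

console.log(recoveryOrder(dependsOn).join(" -> "));
// passport -> laptop -> phone number (SIM) -> password manager -> email -> cloud backups
```

If the function throws, the map contains a cycle, e.g. a password manager whose 2FA codes only live inside that same password manager. Surfacing that kind of lock-out before you actually lose your devices is the whole point of the exercise.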

What else is nice about not having a fixed lease?

- If you stay at a place for just a week, you’ll never have to clean the apartment

- You learn how other people live day to day, e.g. how they set up their rooms and get a good idea of what you enjoy

- You learn more about yourself, like what things are important to you when it comes to having a living space

- When you own a home, you have to deal with maintenance, repairs and other things quite often. When renting an apartment, at least some things are taken care of by the owners. If you stay in an Airbnb, there is literally nothing you have to worry about: if something doesn’t work, you notify the host and that’s it.

I wrote this post in Taipei, Taiwan, where I’m working remotely from the Taipei Google office for 2 weeks before heading to San Francisco.

Being able to escape the cold winter feels amazing 😎


Tags: digital, nomad

How I use Twitter

Background

For most people, using the official Twitter client works fine. It’s optimized to show you new content you might be interested in, makes it easy to follow new users, and shows content that might be most relevant to you first. If you have an engineering mindset, chances are you want to be in control of what you see in your timeline.

I use Twitter to stay up to date with certain people. I want to hear about new projects or content they’ve published, new blog posts, their thoughts, etc. I’m not interested in political opinions, sports scores, etc., which I already have Facebook for. If I follow someone, I’ll read every single tweet from them. For the last 5 years, I haven’t missed a single tweet in my timeline, so I have to be very careful about whom to follow and what content to see. So I set out to customize Twitter to achieve that goal, and to only see about 50-75 tweets per day.

Solution

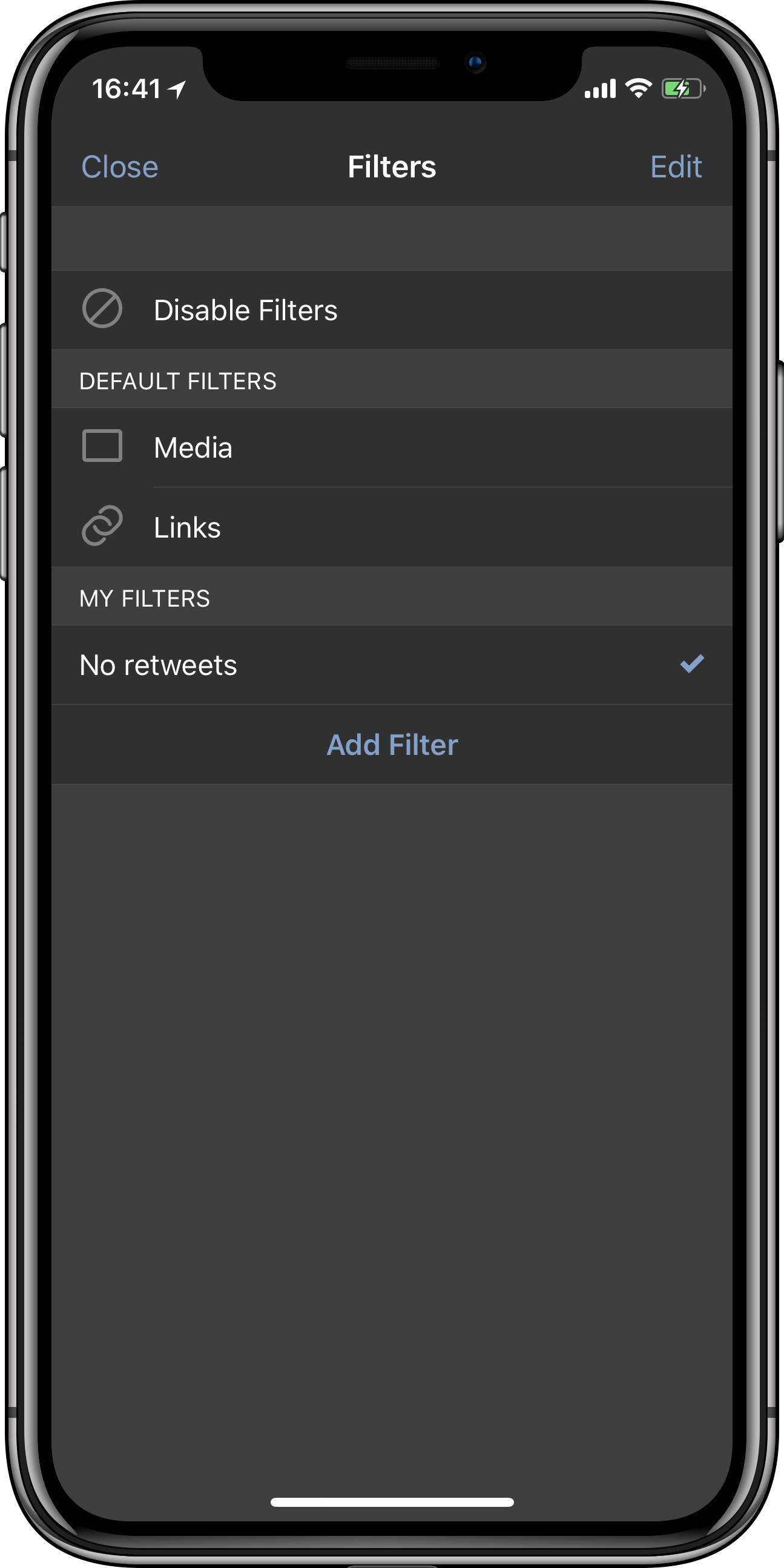

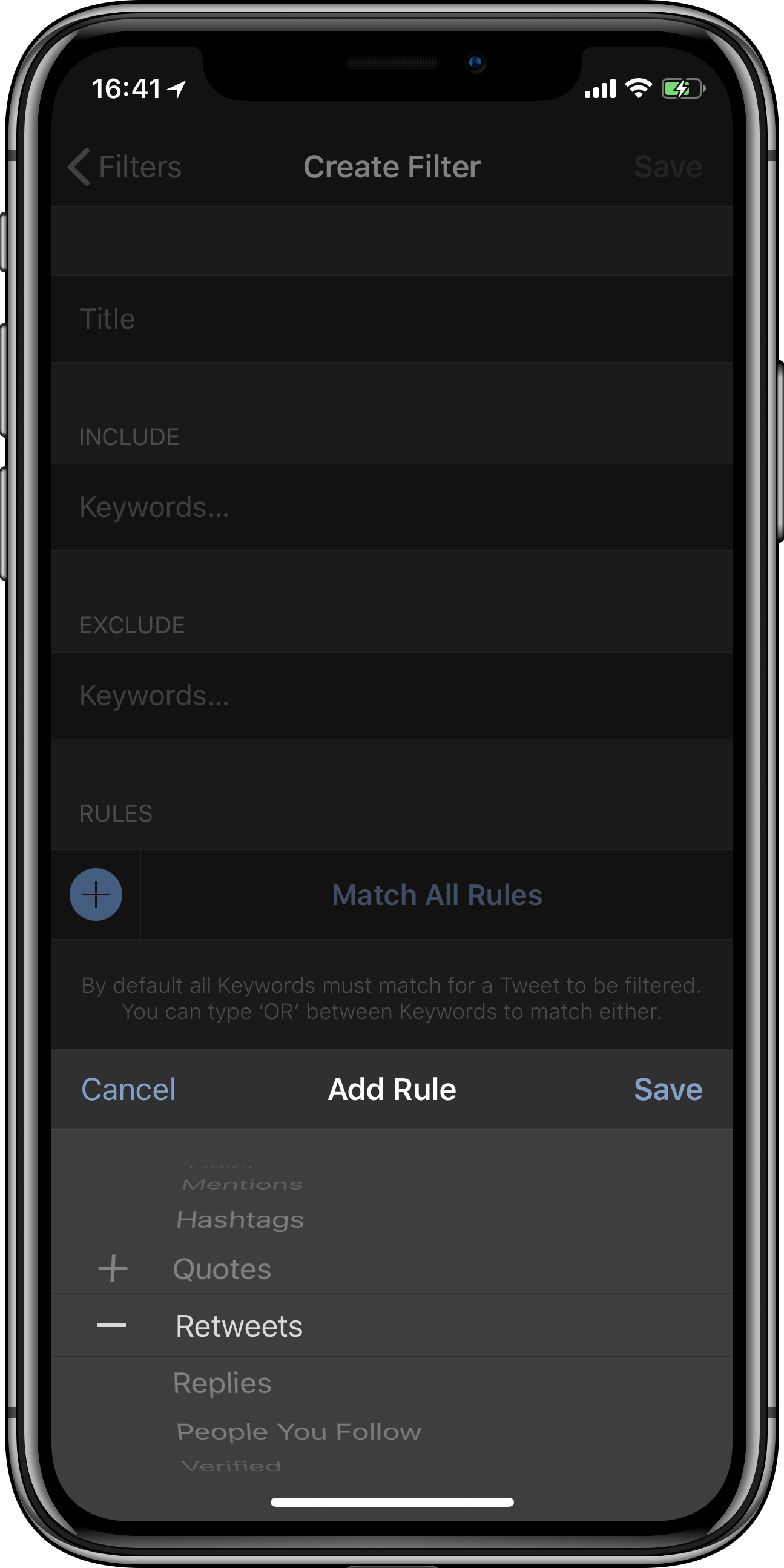

I’ve been using Tweetbot for the last few years; the techniques described below might also work with other third-party Twitter clients.

Muted Keywords

A very basic list of words: as soon as a tweet contains one of them, it is hidden. Examples include:

- headphone jack

- drake

- podcast

- president
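Conceptually, a keyword mute is a "does the tweet contain this text?" check. Here's a minimal JavaScript sketch; I'm assuming case-insensitive substring matching, which may not be exactly how Tweetbot does it (e.g. whether it respects word boundaries), and the example tweets are made up.

```javascript
// Muted keywords from the list above. Assumption: a tweet is hidden if it
// contains any keyword as a case-insensitive substring.
const mutedKeywords = ["headphone jack", "drake", "podcast", "president"];

function isMutedByKeyword(tweetText, keywords = mutedKeywords) {
  const text = tweetText.toLowerCase();
  return keywords.some((keyword) => text.includes(keyword.toLowerCase()));
}

// Made-up timeline for illustration.
const timeline = [
  "Just released a new version of fastlane",
  "New episode of our Podcast is out!",
  "RIP headphone jack",
];

// Only the fastlane tweet survives the filter.
console.log(timeline.filter((tweet) => !isMutedByKeyword(tweet)));
```

Plain substring matching is blunt: "drake" would also hide a tweet about Drakes Bay, which is one reason regex mutes come in handy.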

Muted users

I stopped using this feature now that I use secret lists to follow people (see below) and have disabled RTs. Muting users for a given time period or forever is useful in a few situations:

- Some users in your timeline might promote a product, so you can mute that product

- If a user is at a conference/event you’re not interested in, you can mute them for a few days

Muted Regexes

A very powerful feature of Tweetbot is the ability to define a regex to hide tweets. I use it to hide annoying jokes like

remember \w+

german word for \w+

\w+ is the new \w+

or to hide tweets from people who think we’re interested in their airplane delays, or #sports:

(virgin|Virgin|@United|delta|Delta|JetBlue|jetblue)

twitter.com/i/moments

For every #sports #event there are also custom-made mute filters (truncated):

(?#World Cup)(?i)((?# Terms)(Brazil\s*2014|FIFA|World\s*Cup|Soccer|F(oo|u)tbal)|(?# Chants)(go a l |[^\w](ole\s*){2,})|(?# Teams)(#(B....
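The simpler rules above can be tried out in any regex engine. Here's a minimal JavaScript sketch that hides a tweet if any rule matches anywhere in its text; the example tweets are made up. The World Cup filter is left out: it uses (?#...) comments and a (?i) flag, which ICU-style engines such as Apple's NSRegularExpression accept (I'm assuming that's what Tweetbot uses under the hood), but JavaScript's RegExp rejects both.

```javascript
// Mute rules copied from above. Assumption: a rule mutes a tweet if it
// matches anywhere in the text (no implicit anchoring).
const mutedPatterns = [
  /remember \w+/,
  /german word for \w+/,
  /\w+ is the new \w+/,
  /(virgin|Virgin|@United|delta|Delta|JetBlue|jetblue)/,
  /twitter\.com\/i\/moments/, // "." escaped so it only matches a literal dot
];

function isMutedByRegex(tweetText, patterns = mutedPatterns) {
  // test() is stateless here because none of the patterns use the g flag.
  return patterns.some((pattern) => pattern.test(tweetText));
}

// Made-up timeline for illustration.
const timeline = [
  "Remember Vine?",
  "remember when tweets were 140 characters?",
  "Rust is the new Go",
  "3 hour delay on @United, again",
  "Wrote a blog post about fastlane.ci",
];

// Keeps "Remember Vine?" (capital R, case-sensitive miss) and the fastlane.ci tweet.
console.log(timeline.filter((tweet) => !isMutedByRegex(tweet)));
```

"Remember Vine?" slips through because these patterns are case-sensitive, which is presumably why the airline rule spells out both virgin and Virgin. In JavaScript, adding the i flag avoids listing both spellings.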

Hide all mentions

This changed my whole timeline (for the better). It turns out I follow people for their announcements, what they work on, what they’re doing, what they’re thinking about, etc. I actually don’t want to see 2 people communicating publicly using @ mentions, unless it’s a topic I’m interested in. So I started hiding all tweets that start with an @ symbol using a simple Tweetbot regex:

^@

If I want to see responses to a tweet, I swipe to the left and see all replies.
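Because ^ anchors the match to the start of the text, the ^@ rule only hides tweets that begin with a mention. A quick JavaScript sketch of what it does and doesn't catch (the example tweets are made up):

```javascript
// The mute rule from above: matches only if the first character is "@".
const hideMentions = /^@/;

// Made-up example tweets, paired with whether the rule should hide them.
const examples = [
  ["@friend thanks for the tip!", true],              // reply-style tweet
  [".@friend wrote a great post", false],             // leading "." defeats ^@
  ["Great talk by @friend at the conference", false], // mention mid-tweet
];

for (const [tweet, expectedHidden] of examples) {
  const hidden = hideMentions.test(tweet);
  console.log(`${hidden ? "hidden" : "shown"}: ${tweet}`);
  if (hidden !== expectedHidden) console.log("  (unexpected result)");
}
```

The second case is the old ".@" convention people used precisely so that a tweet starting with a mention would show up for all their followers, so those tweets still get through with this rule.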

Muted Clients

Muting certain clients has been amazing: it’s very easy to set up and cleans up your timeline a lot. Some of the clients I mute:

- Buffer (to avoid “content marketing”: so many companies make the mistake of tweeting the same posts every week or so using Buffer)

- IFTTT (lots of people use it to auto-post non-original content)

- Spotify

- Foursquare (I follow friends on Swarm already, no need to see it twice)
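Client muting works because every tweet carries the name of the app that posted it. In Twitter's v1.1 API that's the source field, an HTML link whose text is the client name. Below is a minimal JavaScript sketch that extracts that name and compares it against the mute list; I'm assuming the anchor-tag format, and the trimmed-down tweet objects are made up for illustration.

```javascript
// Clients to mute, from the list above.
const mutedClients = new Set(["Buffer", "IFTTT", "Spotify", "Foursquare"]);

// Assumption: source looks like '<a href="..." rel="nofollow">Client Name</a>'.
// Falls back to the raw string if it isn't an anchor tag.
function clientName(source) {
  const match = /<a[^>]*>([^<]*)<\/a>/.exec(source);
  return match ? match[1].trim() : source.trim();
}

function isMutedByClient(tweet) {
  return mutedClients.has(clientName(tweet.source));
}

// Made-up, trimmed-down tweet objects.
const tweets = [
  { text: "5 tips for better content marketing", source: '<a href="https://buffer.com" rel="nofollow">Buffer</a>' },
  { text: "Shipping a new release today", source: '<a href="https://example.com/tweetbot" rel="nofollow">Tweetbot for iOS</a>' },
  { text: "I'm at Blue Bottle Coffee", source: "Foursquare" },
];

// Only "Shipping a new release today" is left.
console.log(tweets.filter((t) => !isMutedByClient(t)).map((t) => t.text));
```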

Secret Lists

One issue I had was balancing the number of tweets in my timeline with being polite and following friends. To avoid the whole “Why are you not following me?” conversation, I now use a private list to follow only about 300 people. I open sourced the script I used to migrate everyone I used to follow over to a private list.

Disable RTs

This has been a great change: As described above, I follow people for what they do, what they think about, and what they’re working on. Some people have a habit of RTing content that might be interesting, but isn’t relevant to why I want to stay subscribed to their tweets. On Tweetbot, you can turn RTs off.

Muting hashtags

I thank everyone for using hashtags for certain events, making it easy to hide them from my timeline :)

Disadvantages of this approach

Some of the newer Twitter features don’t have an API, and therefore can’t be offered by Tweetbot. This includes Polls, Moments and Group DMs. Since I don’t want to miss group DMs, I set up email notifications for Twitter DMs, plus a Gmail filter that auto-archives emails that are not from group DMs.

Summary

I’ve spent quite some time optimizing this workflow; it’s very specific and probably not useful for most people. I try to minimize my time on social media: I only browse my Twitter feed when I have a few minutes to kill on the go, which means I work through my timeline only on my iPhone, and reply to mentions and DMs only on my Mac. I don’t want to come across as uninterested: I do follow people on Facebook, and I do read the news and stay up to date. For me, Twitter is a place for very specific content, and I want to keep using it that way.

Tags: twitter

iOS Privacy: Track website activities, steal user data & credentials and add your own ads to any website in your iOS app

Background

Most iOS apps need to show external web content at some point. Apple provides multiple ways for developers to do so; the official ones are:

Launch a URL in Safari

This uses the app switcher to move your own app into the background. This way, users get their own browser (Safari), with their session, content blockers, browser extensions (e.g. 1Password), etc. As launching Safari puts your app into the background, many app developers worry that the user won’t come back to them.

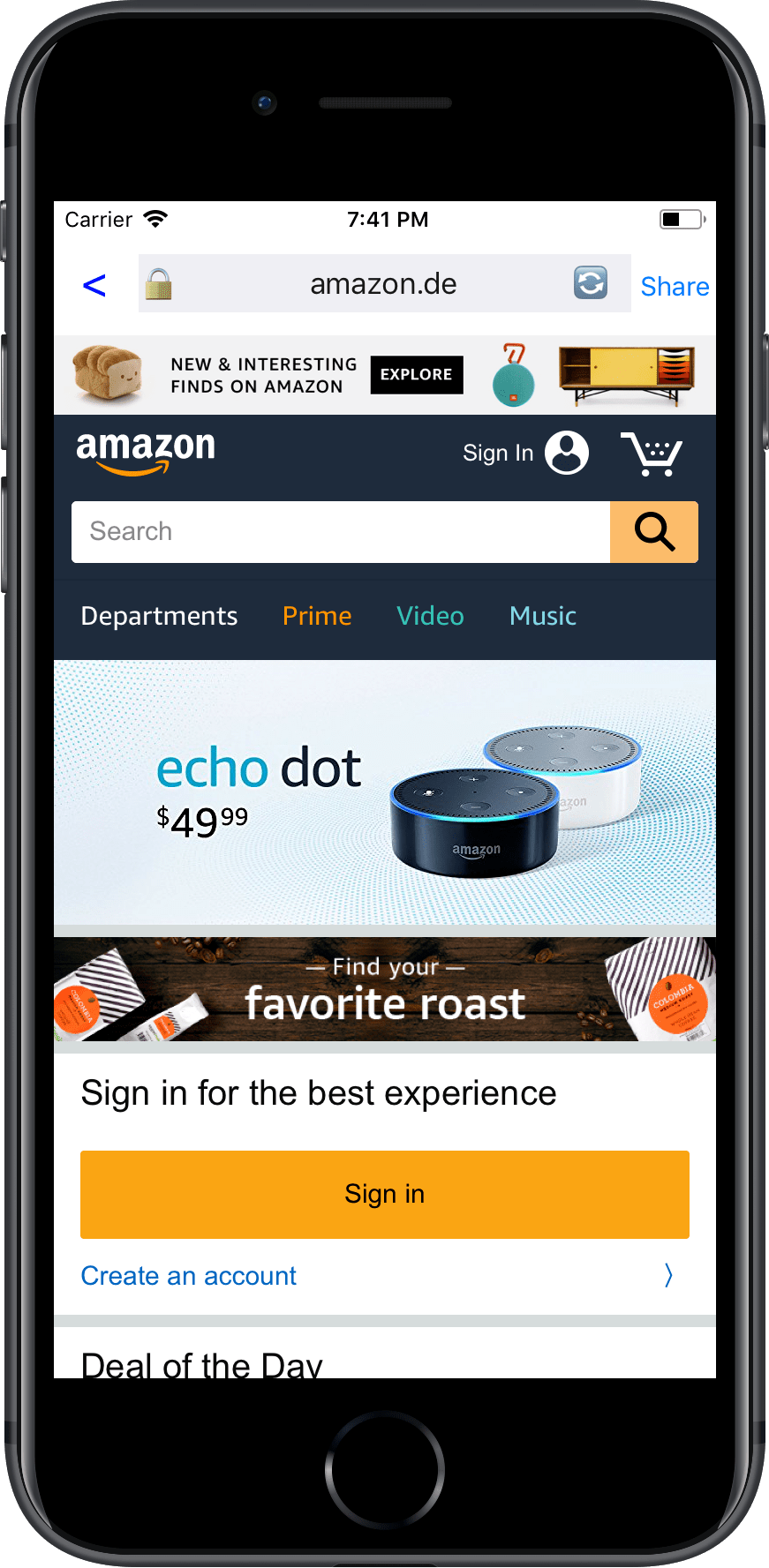

Check out the first video to see how this looks in action ➡️

Use in-app SFSafariViewController

Many third-party iOS apps use this approach (e.g. Tweetbot).

It allows app developers to use the built-in Safari, with all its features, without making the user leave their application.

Check out the second video to see how this looks in action ➡️

Current state with larger social network apps

Many larger iOS apps re-implemented their own in-app web browser. While this was necessary many years ago, nowadays it’s not only no longer required, it actually adds a major risk for the end user.

Those custom in-app browsers usually use their own UI elements:

- Custom address bar

- Custom SSL indicator

- Custom share button

- Custom reload button

Problems with custom in-app browsers

If an app renders its own WKWebView, it doesn’t just inconvenience the user, it actually puts them at serious risk.

Convenience

User session

The user’s login session isn’t available, meaning if you get a link to e.g. an Amazon product, you now have to log in and enter your 2-factor authentication code to purchase it.

Browser extensions

If the user has browser extensions (like password managers), they won’t have access to them in a custom in-app browser.

Deep linking

Deep linking itself has multiple open issues on the iOS platform. A custom in-app browser adds an extra layer that doesn’t work well with deep linking. Instead of opening the Amazon app when tapping an Amazon link in “Social Media App X”, it opens the product in a plain web view, with no login session and no way to open the product in the app.

Content blockers

If the user has content blockers installed, custom in-app browsers don’t use them.

Bookmarks

There is no way for the user to store the current URL in their bookmarks.

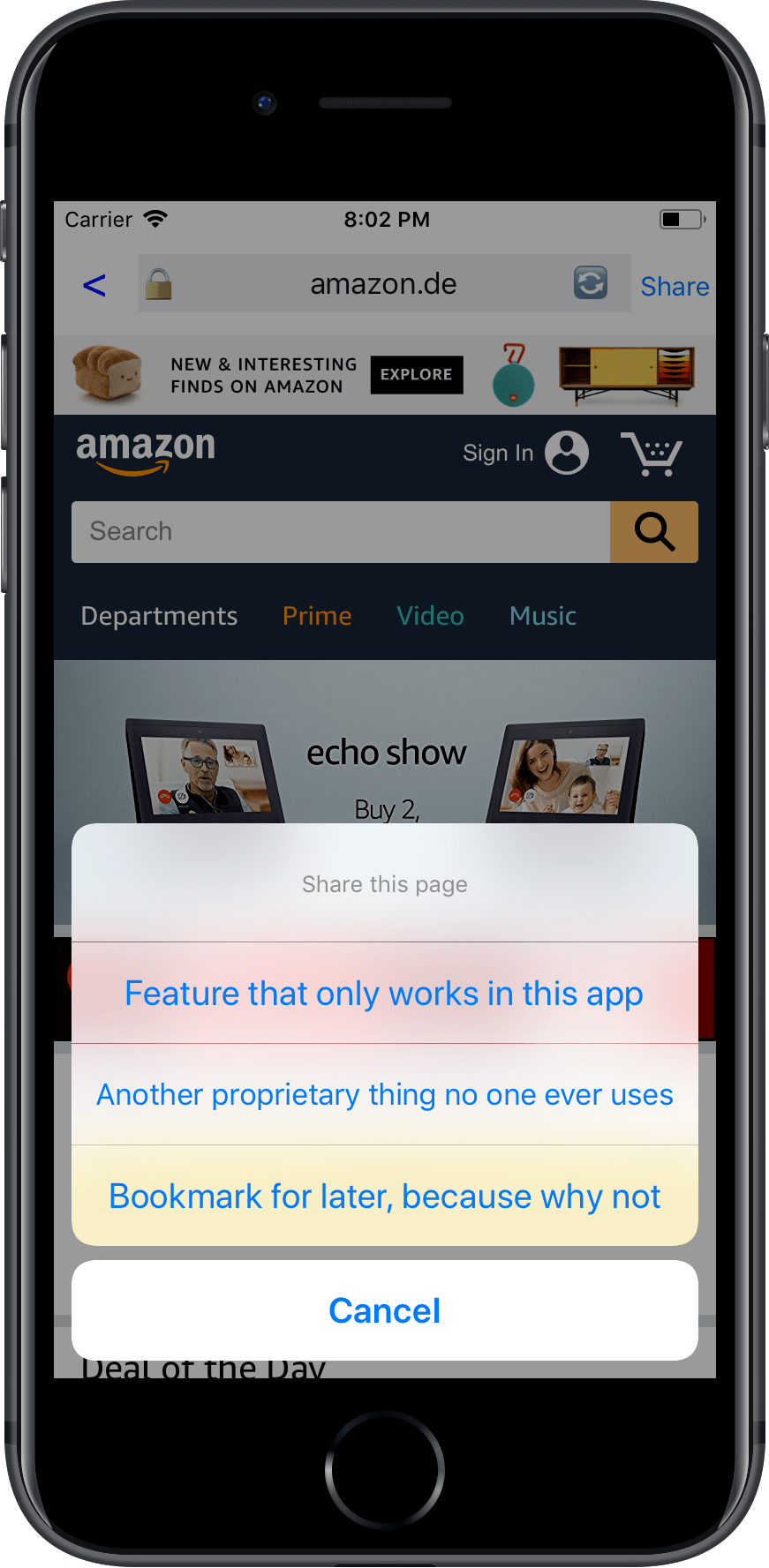

Share a website

Apps use this opportunity to force their users to use whatever “social features” they think are useful to them. Usually that means locking the user into their ecosystem, and not allowing people to share the content on the platform of their choice. There should be an explicit App Store rule against this.

Security & Privacy

Using a custom in-app browser allows the app developer to inject ANY JavaScript code into the website the user visits. This means any content, any data and any input that is shown or stored on the website is accessible to the app.

Analytics

This is basically the main reason why in-app browsers are still a thing: they allow the app maintainer to inject additional analytics code without telling the user. This way, the app’s developer can track the following:

- How long does the user visit the linked website?

- How fast does the user scroll?

- Which links does the user open, and how long do they stay on each of them?

- Combined with watch.user, the app can record you while you browse third-party websites, or even use the iPhone X face sensor to analyze your face

- Every single tap, swipe or any other gesture

- Device movements, GPS location (if granted) and any other granted iOS sensor, while the app is still in the foreground.

User credentials

Any app with an in-app browser can easily steal the user’s email address, passwords and two-factor authentication codes. It can do that by injecting JavaScript code that bridges the data over to the app, or sends it directly to a remote host. This is simple; it’s basically code like this:

const email = document.getElementById("email").value; // the field IDs differ per website

const password = document.getElementById("password").value;

That’s all that’s needed: just inject the code above into every website, run it on every keystroke, and you’ll get a nice list of email addresses and passwords.

To run JavaScript in your own web view (a WKWebView), all it takes is:

NSString *script = @"document.getElementById('password').value";

[self.webView evaluateJavaScript:script completionHandler:^(id result, NSError *error) { /* result holds the password field's value */ }];

User data

Once the user is logged in, you also get access to the full HTML DOM + JavaScript data & events, which means you have full access to whatever the user sees. This includes things like your emails, your Amazon order history, your friend list, or whatever other data/website you access from an in-app web view.

HTTPS

Web browsers usually have a standardised way of indicating the SSL certificate status next to the URL. In the case of custom in-app browsers, the SSL logo is added by the app’s author, meaning you trust the app’s maintainer to only show the logo if the site actually has a valid SSL certificate.

Ads

Custom in-app browsers allow app developers to inject their own ad system into any website that’s shown as part of their app. Not only that, they can replace the ad identifiers of ads that are already shown on the website, so that the revenue goes directly to them instead of the website owner.

And more

These are just some of the things that immediately come to mind every time I use an in-app browser; there are probably a lot more evil things a company or SDK could be doing.

How can we solve this?

- Reject apps that don’t use SFSafariViewController or launch Safari directly to show third party website content

- There should be exceptions, e.g. if a webview is used to show parts of the UI, or dynamic content, but it should be illegal to use webviews to show a linked or third party website

I also filed a radar for this issue.

Similar projects I’ve worked on

I’ve published more posts on how apps can access the camera, the user’s location data, their Mac screen and their iCloud password; check out krausefx.com/privacy for more.

Tags: security, privacy, sdks

Trusting third party SDKs

Update 2020: Google Chrome has fixed this issue

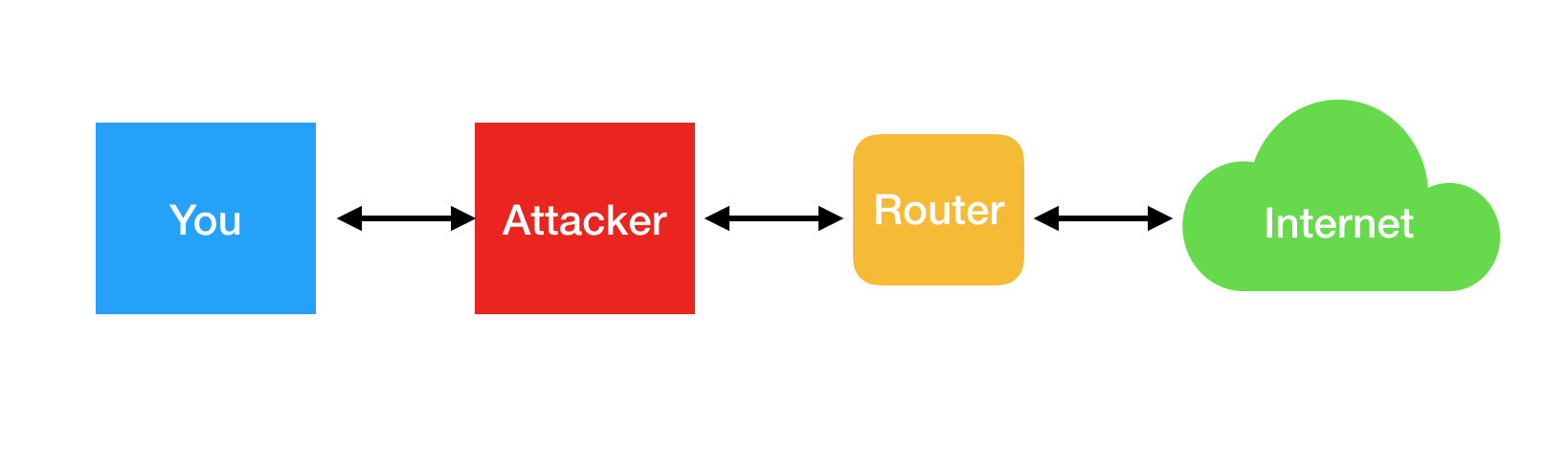

Third-party SDKs can often easily be modified while you download them! Using a simple person-in-the-middle attack, anyone in the same network can insert malicious code into the library, and from there into your application, which then runs in your users’ pockets.

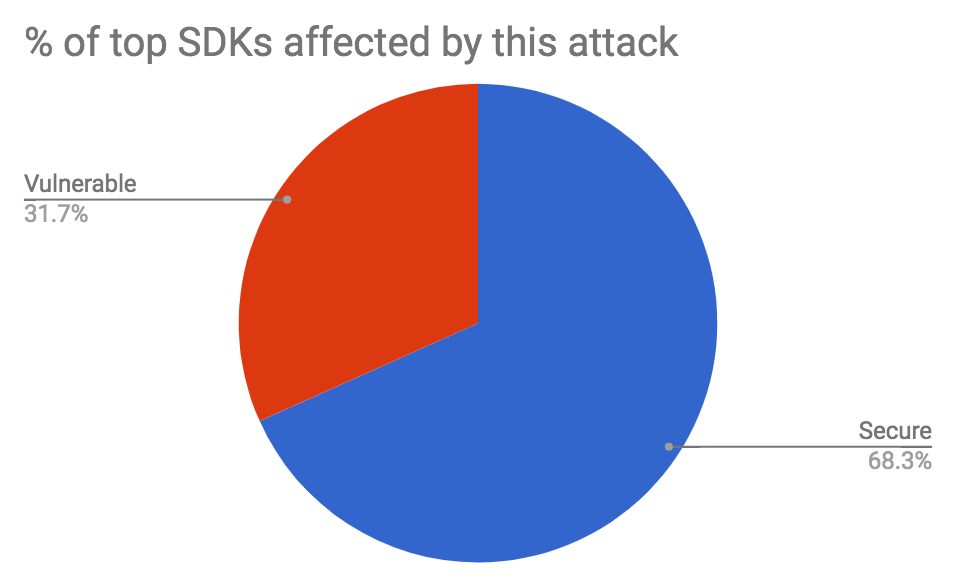

31% of the most popular closed-source iOS SDKs are vulnerable to this attack, as well as a total of 623 libraries on CocoaPods. As part of this research I notified the affected parties, and submitted patches to CocoaPods to warn developers and SDK providers.

What are the potential consequences of a modified SDK?

It’s extremely dangerous if someone modifies an SDK before you install it. You are shipping your app with that code/binary. It will run on thousands or millions of devices within a few days, and everything you ship within your app runs with the exact same privileges as your app.

That means any SDK you include in your app has access to:

- The same keychain your app has access to

- Any folders/files your app has access to

- Any app permissions your app has, e.g. location data, photo library access

- iCloud containers of your app

- All data your app exchanges with a web server, e.g. user logins, personal information

Apple enforces iOS app sandboxing for good reasons, so don’t forget that any SDK you include in your app runs inside your app’s sandbox, and has access to everything your app has access to.

What’s the worst that a malicious SDK could do?

- Steal sensitive user data, basically add a keylogger for your app, and record every tap

- Steal keys and user’s credentials

- Access the user’s historic location data and sell it to third parties

- Show phishing pop-ups for iCloud, or other login credentials

- Take pictures in the background without telling the user

The attack described here shows how an attacker can use your mobile app to steal sensitive user data.

Web Security 101

To understand how malicious code can be bundled into your app without your permission or awareness, I’ll provide the background needed to understand how a MITM attack works and how to avoid it.

The information below is vastly simplified, as I try to describe things in a way that a mobile developer without too much network knowledge can get a sense of how things work and how they can protect themselves.

HTTPS vs HTTP

HTTP: Unencrypted traffic. Anybody in the same network (WiFi or Ethernet) can easily listen to the packets. It’s very straightforward to do on unencrypted WiFi networks, but it’s actually almost as easy on a protected WiFi or Ethernet network. There is no way for your computer to verify that the packets came from the host you requested data from; other computers can receive packets before you, open and modify them, and send the modified version to you.

HTTPS: With HTTPS traffic, other hosts in the network can still listen to your packets, but can’t open them. They still get some basic metadata like the host name, but no details (like the body, full URL, …). Additionally, your client verifies that the packets came from the original host and that no one on the way modified the content. HTTPS is based on TLS.

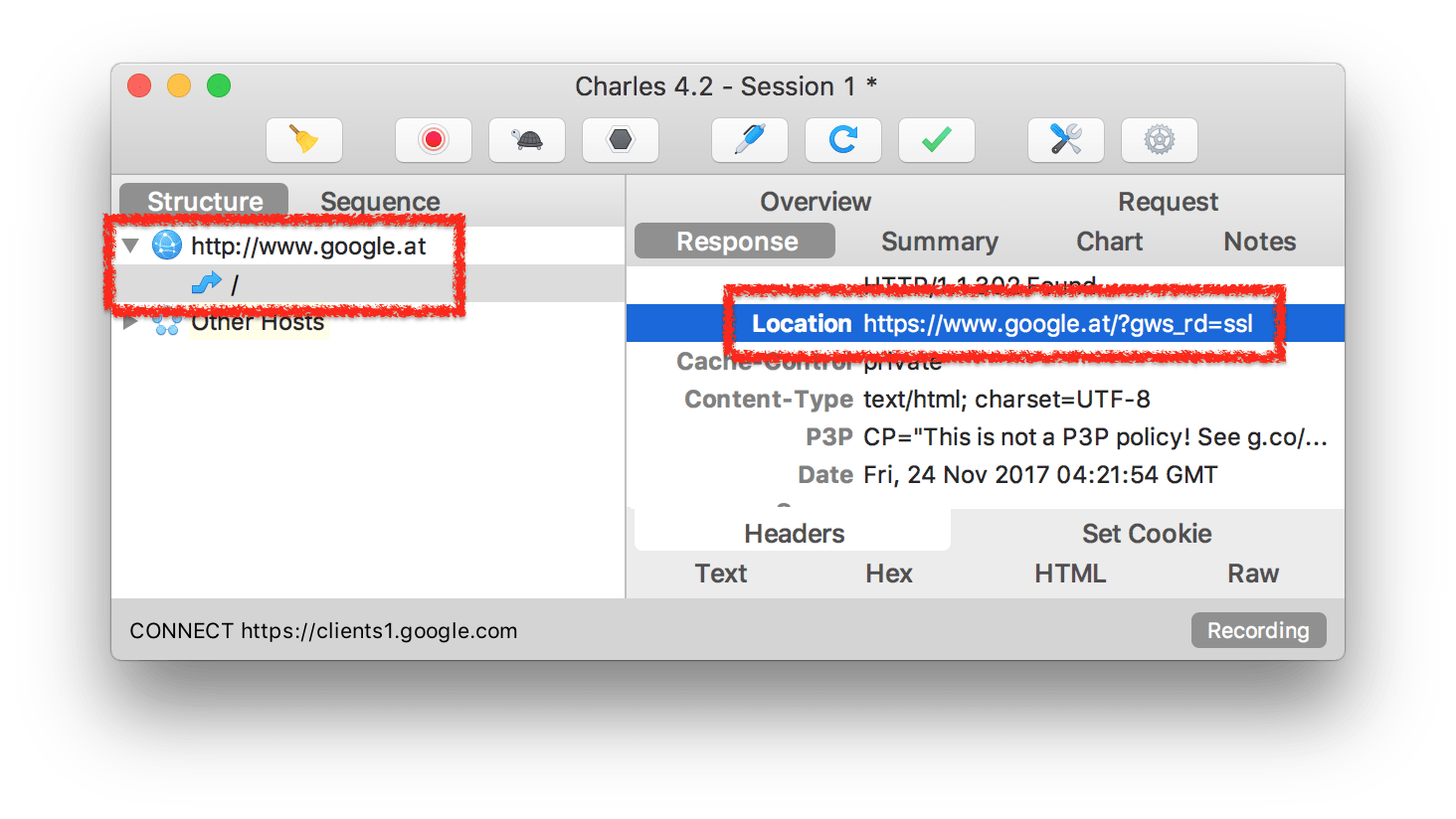

How a browser switches from HTTP to HTTPS

Enter “http://google.com” in your web browser (make sure to use “http”, not “https”). You’ll see how the browser automatically switches from the unsafe “http” protocol to “https”.

This switch doesn’t happen in your browser but comes from the remote server (google.com), as your client (in this case the browser) can’t know which protocols the host supports. (The exception is hosts that make use of HSTS.)

The initial request happens via “http”, so the server has no choice but to respond in clear text “http”, using a “301 Moved Permanently” response code to tell the client to switch over to the secure “https” protocol.

You probably already see the problem here: since the response is being sent in clear text also, an attacker can modify that particular packet and replace the redirect destination URL to stay unencrypted “http”. This is called SSL Stripping, and we’ll talk more about this later.
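To make this concrete, here's a minimal JavaScript sketch of the pieces involved: the plain-HTTP 301 response (the packet an attacker can rewrite), the rewrite itself, and the Strict-Transport-Security (HSTS) header a site sends over HTTPS so that future visits skip the plain-HTTP request entirely. It only builds response text instead of running a server, and it's an illustration, not how google.com actually implements this.

```javascript
// Response to a plain http:// request. It travels unencrypted, so anyone on
// the path can read or rewrite it, including the Location header.
function plainHttpRedirect(host, path) {
  return [
    "HTTP/1.1 301 Moved Permanently",
    `Location: https://${host}${path}`,
    "Content-Length: 0",
    "",
    "",
  ].join("\r\n");
}

// What an SSL-stripping attacker changes in transit: the victim stays on
// http://, while the attacker fetches the real https:// page and relays it.
function stripRedirect(rawResponse) {
  return rawResponse.replace("Location: https://", "Location: http://");
}

// Sent on HTTPS responses only: tells the browser to use https:// for this
// host (and its subdomains) for the next maxAgeSeconds without asking first.
function hstsHeader(maxAgeSeconds) {
  return ["Strict-Transport-Security", `max-age=${maxAgeSeconds}; includeSubDomains`];
}

const redirect = plainHttpRedirect("google.com", "/");
console.log(redirect.split("\r\n")[1]);                // Location: https://google.com/
console.log(stripRedirect(redirect).split("\r\n")[1]); // Location: http://google.com/
console.log(hstsHeader(31536000).join(": "));          // Strict-Transport-Security: max-age=31536000; includeSubDomains
```

HSTS only helps once the browser has received that header over a genuine HTTPS connection, or if the domain is on the browser's built-in preload list; the very first plain-HTTP visit is still exposed.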

How network requests work

Very simplified, network requests work on multiple layers. Depending on the layer, different information is available on how to route a packet:

- The lowest layer we care about here (Data Link Layer) uses MAC addresses to identify hosts in a network

- The layer above (Network Layer) uses IP addresses to identify hosts in the network

- The layers above add port information and the actual message content

If you’re interested, you can learn how the OSI (Open Systems Interconnection) model works, and in particular the TCP/IP model used in practice (e.g. http://microchipdeveloper.com/tcpip:tcp-ip-five-layer-model).

So, if your computer sends a packet to the router, how does the router know which MAC address to deliver a packet to when it only knows the destination IP address? To solve this problem, the router uses a protocol called ARP (Address Resolution Protocol).

How ARP works and how it can be abused

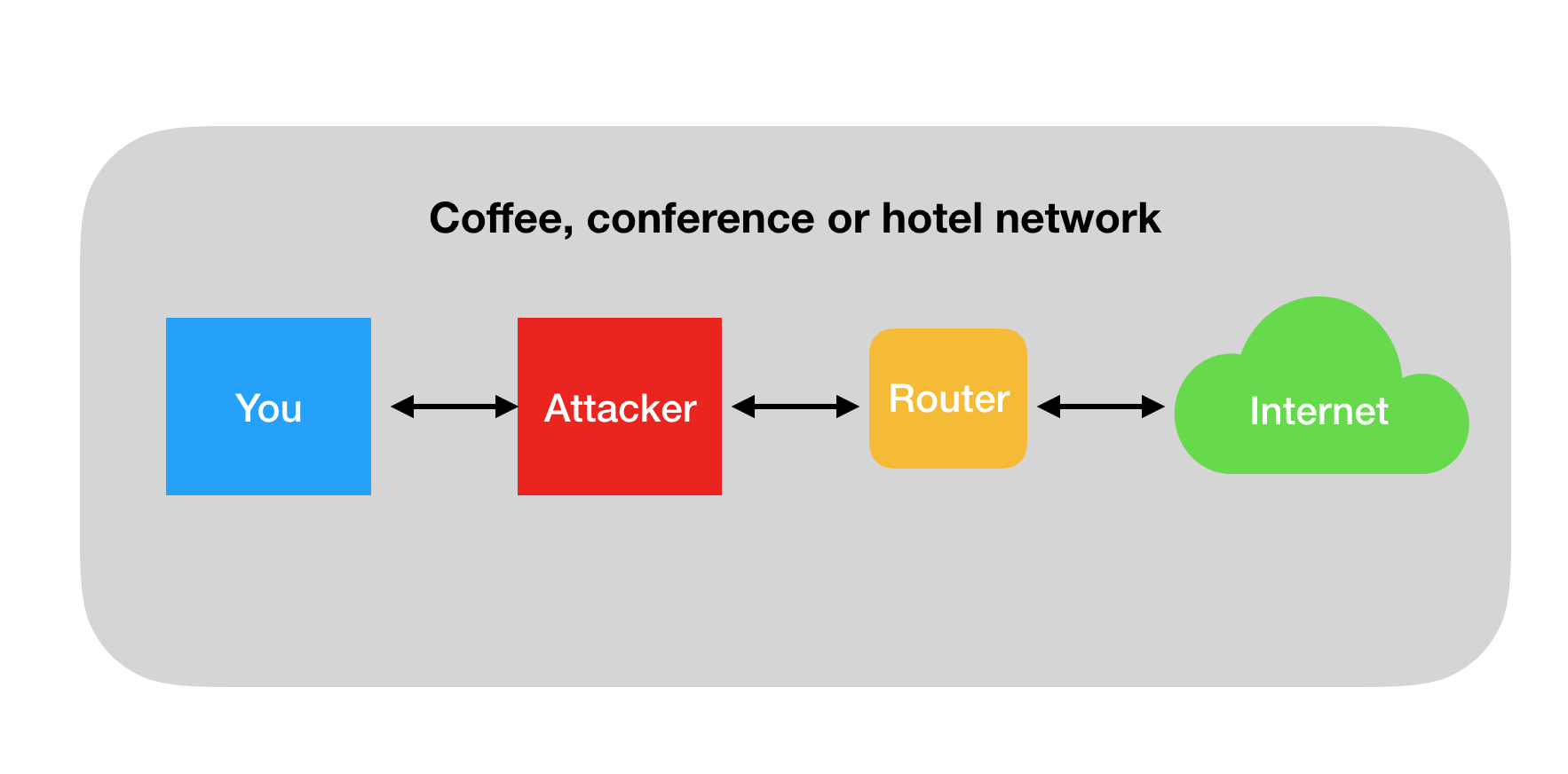

Simplified, the devices in a network use an ARP table to remember which MAC address belongs to a given IP address. The way ARP works is simple: if a device wants to know where to send a packet for a certain IP address, it asks everyone in the network: “Which MAC address belongs to this IP?”. The device with that IP then replies to this message ✋

Unfortunately, there is no way for a device to authenticate the sender of an ARP message. Therefore an attacker can answer ARP requests faster than the real device, or simply send unsolicited replies, basically saying: “Hey, please send all packets that should go to IP address X to this MAC address”. The router will remember that and use that information for all future requests. This is called “ARP poisoning.”

See how all packets are now routed through the attacker instead of going directly from the remote host to you?
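The weakness boils down to an ARP cache that believes whatever reply it hears last. Here's a toy JavaScript model of that behavior (no real networking; the IP and MAC addresses are made up), not an implementation of the actual protocol:

```javascript
// Toy model of an ARP cache mapping IP addresses to MAC addresses.
// Like real ARP, it has no authentication: the latest reply always wins.
class ArpCache {
  constructor() {
    this.entries = new Map();
  }

  // Called for every ARP reply seen on the network, solicited or not.
  handleReply(ip, mac) {
    this.entries.set(ip, mac);
  }

  // The MAC address that packets for `ip` will be delivered to.
  resolve(ip) {
    return this.entries.get(ip);
  }
}

const router = new ArpCache();
const victimIp = "192.168.1.23";

// 1. The victim answers "Which MAC address belongs to 192.168.1.23?"
router.handleReply(victimIp, "aa:aa:aa:aa:aa:aa");

// 2. The attacker sends a forged reply for the same IP.
router.handleReply(victimIp, "ee:ee:ee:ee:ee:ee");

// Packets meant for the victim now go to the attacker's machine.
console.log(router.resolve(victimIp)); // ee:ee:ee:ee:ee:ee
```

A real attack typically poisons both directions (the victim's cache and the router's) and forwards the traffic so nothing looks broken. Defenses such as static ARP entries or switch features like Dynamic ARP Inspection work by refusing to accept unverified mappings like the one in step 2.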

As soon as the packets go through the attacker’s machine there is some risk. It’s the same risk you have when trusting your ISP or a VPN service: if the services you use are properly encrypted, they can’t really know details about what you’re doing or modify packets without your client (e.g. browser) noticing. As mentioned before there is still basic information that will always be visible such as certain metadata (e.g. the host name).

If there are web packets that are unencrypted (say HTTP), the attacker can not only look inside and read their content, but can also modify anything in there, with no way for you to detect the attack.

Note: the technique described above is different from what you might have read about the security issues with public WiFi networks. Public WiFi networks are a problem because everybody can just read whatever packets are flying through the air, and if they’re unencrypted HTTP, it’s easy to read what’s happening. ARP poisoning works on any network, public or not, WiFi or Ethernet.

Let’s see this in action

Let’s look into some SDKs and how they distribute their files, and see if we can find something.

CocoaPods

Open source Pods: CocoaPods uses git under the hood to download code from code hosting services like GitHub. These clones usually go over https:// or ssh://, both of which are encrypted (unlike the plain git:// protocol, which isn’t). In general, if you use CocoaPods to install open source SDKs from GitHub, you’re pretty safe.

Closed source Pods: When preparing this blog post, I noticed that Pods can define an HTTP URL to reference binary SDKs, so I submitted multiple pull requests (1 and 2) that got merged and released with CocoaPods 1.4.0 to show warnings when a Pod uses unencrypted http.

Crashlytics SDK

Crashlytics uses CocoaPods as the default distribution method, but has 2 alternative installation methods: the Fabric Mac app and manual installation, both of which are https encrypted, so there’s not much an attacker can do here.

Localytics

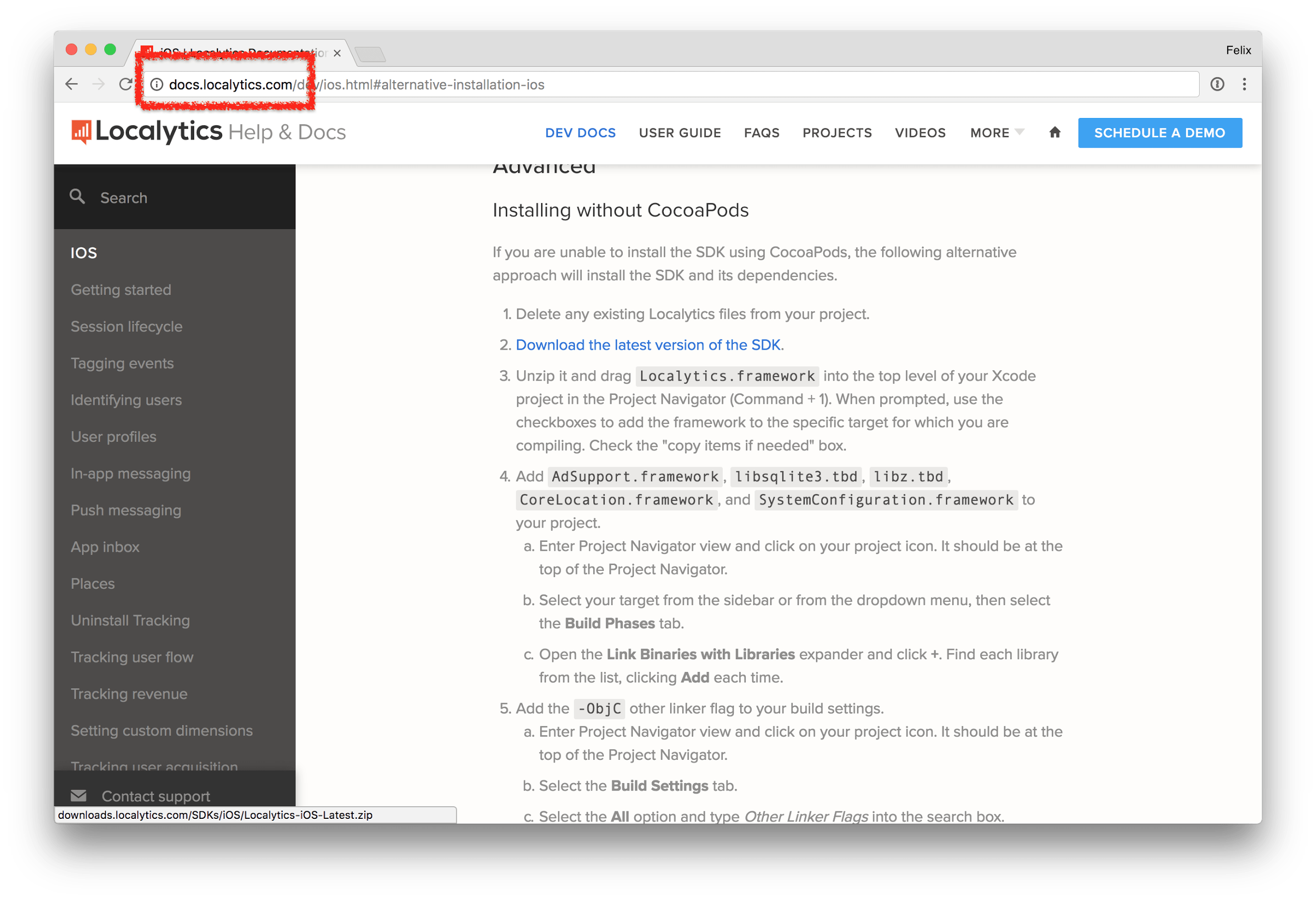

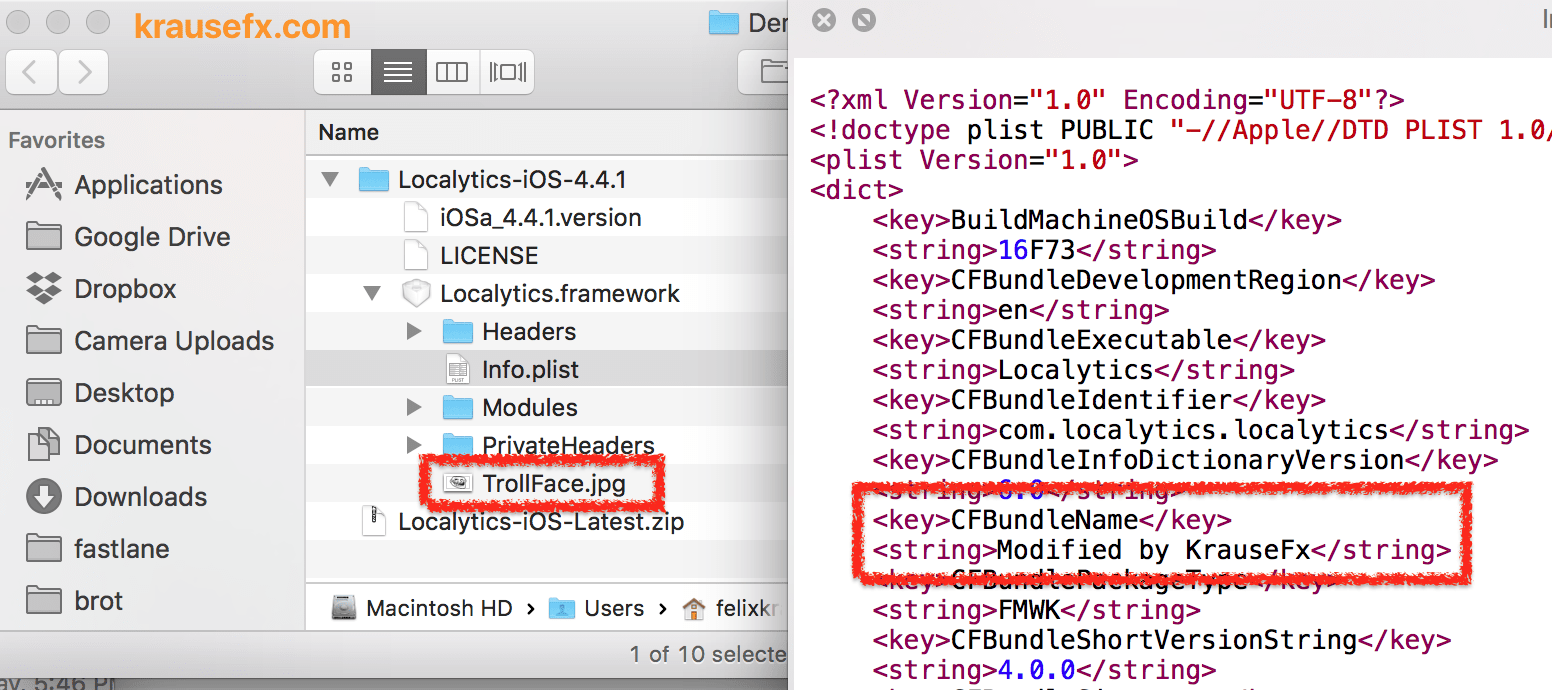

Let’s look at a sample SDK: the docs page is served unencrypted via http (see the address bar)

So you might think: “Ah, I’m just reading the docs here, I don’t care if it’s unencrypted”. The problem here is that the download link (in blue) is also transferred as part of the website, meaning an attacker can easily replace the https:// link with http://, making the actual file download unsafe.

Alternatively, an attacker could just swap the https:// link for a similar-looking URL the attacker controls:

- https://s3.amazonaws.com/localytics-sdk/sdk.zip

- https://s3.amazonaws.com/localytics-sdk-binaries/sdk.zip

And there is no good way for the user to verify that the specific host, URL or S3 bucket belongs to the author of the SDK.
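One partial defense is to audit a page's download links against an allowlist you got from somewhere else. Here's a minimal JavaScript sketch that flags archive links that are plain http:// or that point outside a trusted URL prefix. The trusted prefix is hypothetical: purely for illustration it assumes the first of the two URLs above is the genuine one, and in practice the allowlist must come from a channel the attacker can't tamper with, since whoever can rewrite links can rewrite the whole page.

```javascript
// Hypothetical allowlist of URL prefixes trusted for SDK downloads. The
// trailing "/" matters: without it, ".../localytics-sdk-binaries/" would pass.
const trustedPrefixes = ["https://s3.amazonaws.com/localytics-sdk/"];

// Assumption: SDK downloads are archives with one of these extensions.
const downloadPattern = /\.(zip|tgz|tar\.gz)$/;

// Returns a human-readable problem for every suspicious download link.
function auditDownloadLinks(html, prefixes = trustedPrefixes) {
  const problems = [];
  for (const [, url] of html.matchAll(/href="([^"]+)"/g)) {
    if (!downloadPattern.test(url)) continue; // ignore non-download links
    if (url.startsWith("http://")) {
      problems.push(`unencrypted link: ${url}`);
    } else if (!prefixes.some((prefix) => url.startsWith(prefix))) {
      problems.push(`untrusted location: ${url}`);
    }
  }
  return problems;
}

// Made-up page: the genuine link, a stripped link, and a lookalike bucket.
const page = `
  <a href="https://s3.amazonaws.com/localytics-sdk/sdk.zip">Download</a>
  <a href="http://s3.amazonaws.com/localytics-sdk/sdk.zip">Download</a>
  <a href="https://s3.amazonaws.com/localytics-sdk-binaries/sdk.zip">Download</a>
`;
console.log(auditDownloadLinks(page)); // flags the 2nd and 3rd links
```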

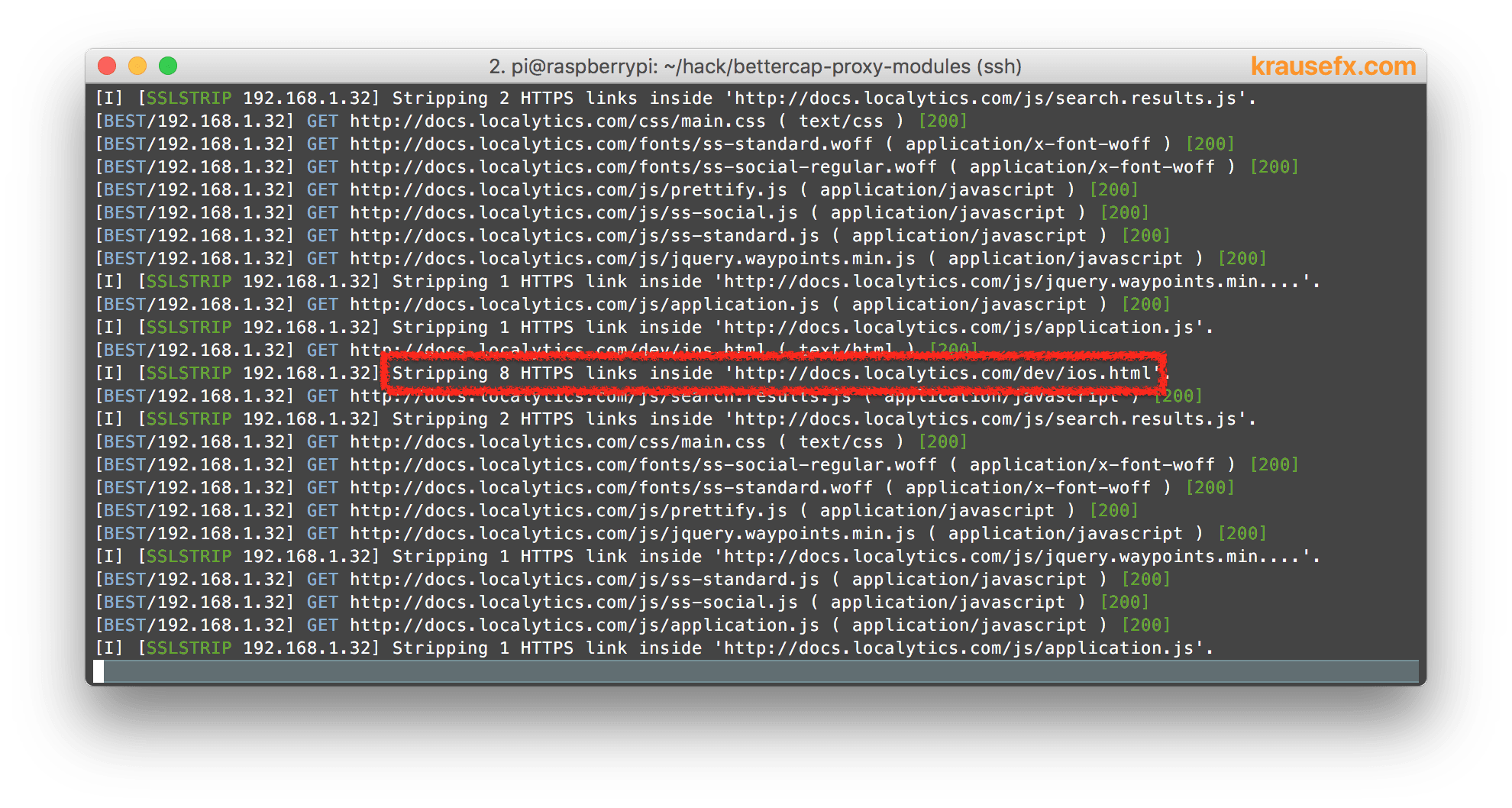

To verify this, I set up my Raspberry Pi to intercept the traffic and perform various kinds of SSL stripping (downgrading HTTPS connections to HTTP) across the board, from JavaScript files to image resources and, of course, download links.

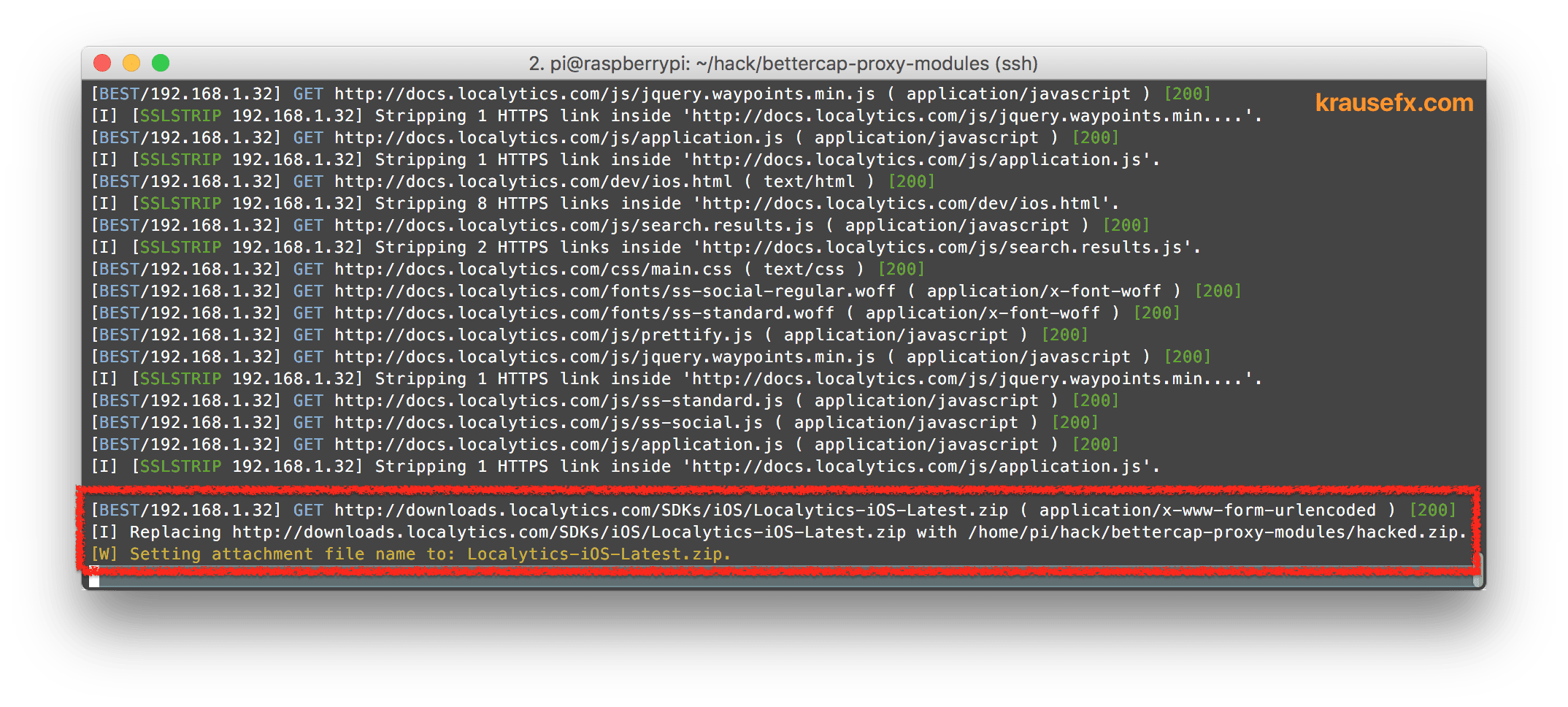

Once the download link was downgraded to HTTP, it’s easy to replace the content of the zip file as well:

Replacing HTML text on the fly is pretty easy, but how can an attacker replace the content of a zip file or binary?

- The attacker downloads the original SDK

- The attacker inserts malicious code into the SDK

- The attacker compresses the modified SDK

- The attacker looks at packets coming by, and jumps in to replace any zip file matching a certain pattern with the file the attacker prepared

(This is the same approach used by the image replacement trick: Every image that’s transferred via HTTP gets replaced by a meme)

As a result, the downloaded SDK might include additional files or code that was modified:

For this attack to work, the requirements are:

- The attacker is in the same network as you

- The docs page is unencrypted and allows SSL Stripping on all links

Localytics resolved the issue after I disclosed it, so both the docs page and the actual download are now HTTPS encrypted.

AskingPoint

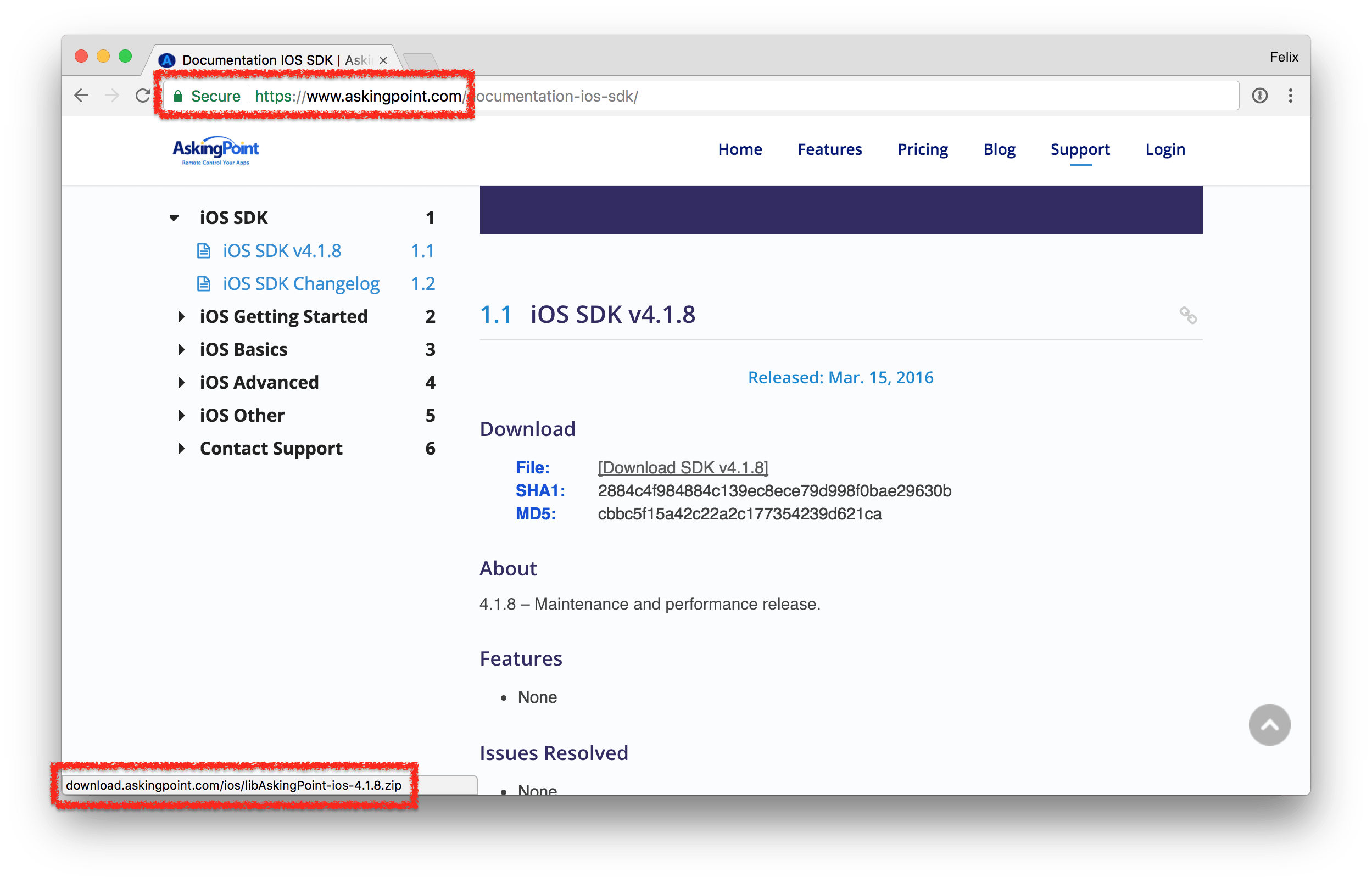

The next SDK has an HTTPS encrypted docs page, and judging by the screenshot, it looks secure.

As it turns out, the HTTPS based website links to an unencrypted HTTP file, and web browsers don’t warn users in those cases (some browsers already show a warning if JS/CSS files are loaded via HTTP). It’s almost impossible for users to detect that something is going on here, unless they manually compare the provided hashes. As part of this project, I filed security reports with both Google Chrome (794830) and Safari (rdar://36039748), asking them to warn users about unencrypted file downloads on HTTPS sites. (Google Chrome has since resolved this.)
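The condition a browser would need to flag here is simple to state: the page is HTTPS, but the resolved download URL is plain HTTP. A minimal Python sketch; the helper name and the example.com URLs are placeholders, not taken from any of the SDKs above:

```python
from urllib.parse import urljoin, urlparse


def is_insecure_download(page_url: str, href: str) -> bool:
    """True if an HTTPS page links to a file that would be fetched over plain HTTP."""
    target = urljoin(page_url, href)  # resolve relative links against the page URL
    return urlparse(page_url).scheme == "https" and urlparse(target).scheme == "http"


page = "https://docs.example.com/ios/install"
print(is_insecure_download(page, "http://cdn.example.com/sdk.zip"))   # True
print(is_insecure_download(page, "https://cdn.example.com/sdk.zip"))  # False
print(is_insecure_download(page, "/downloads/sdk.zip"))               # False
```

Relative links inherit the page’s scheme, which is why they are resolved before checking.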

AWS SDK

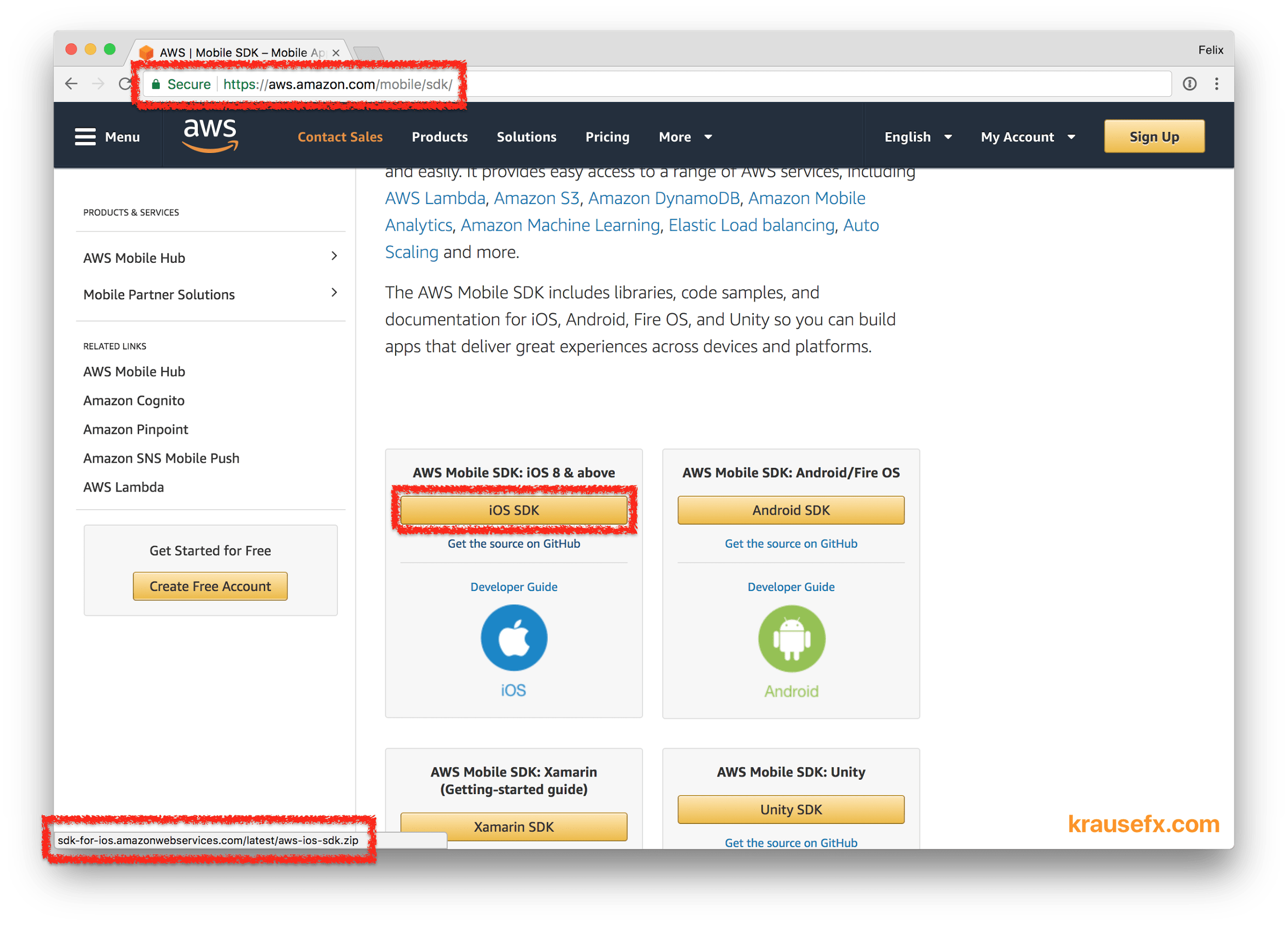

At the time I was conducting this research, the AWS iOS SDK download page was HTTPS encrypted, but linked to an unencrypted zip download, similar to the SDKs mentioned before. Amazon resolved the issue after I disclosed it.

Putting it all together

Think back to the iOS privacy vulnerabilities mentioned before (iCloud phishing, location access through pictures, accessing the camera in the background). What if we’re not talking about evil developers trying to trick their users, but about attackers that target you, the iOS developer, to reach millions of users within a short amount of time?

Attacking the developer

What if an SDK gets modified via a person-in-the-middle attack as you download it, and the modified version contains malicious code that breaks the user’s trust? Take the iCloud phishing popup as an example: how hard would it be for an attacker to use other developers’ apps to steal users’ passwords and send them to a remote server?

In the video below, you can see a sample iOS app that shows a map view. After the AWS SDK is downloaded and added to the project, malicious code gets executed: in this case, an iCloud phishing popup is shown, and the cleartext iCloud password can be accessed and sent to any remote server.

The only requirement for this particular attack to work is that the attacker is in the same network as you (e.g. is staying in the same conference hotel). Alternatively, this attack can also be carried out by your ISP or the VPN service you use. My Mac runs the default macOS configuration, meaning there is no proxy, custom DNS or VPN set up.

Setting up an attack like this is surprisingly easy using publicly available tools that are designed to do automatic SSL Stripping, ARP pollution and replacing the content of various requests. If you’ve done it before, it will take less than an hour to set everything up on any computer, including a Raspberry Pi, which I used for my research. The total cost of the whole attack is therefore less than $50.

I decided to publish neither the names of all the tools I used nor the code I wrote. You might want to look into well-known tools like sslstrip, mitmproxy and Wireshark.

Running arbitrary code on the developer’s machine

The previous example injected malicious code into the iOS app using a hijacked SDK. Another attack vector is the developer’s Mac. Once an attacker can run code on your machine, and maybe even has remote SSH access, the damage could be significant:

- Activate remote SSH access for the admin account

- Install a keylogger to get the admin password

- Decrypt the keychain using the password, and send all credentials to a remote server

- Access local secrets, like AWS credentials, CocoaPods & RubyGems push tokens and more

- If the developer maintains a popular CocoaPod, spread more malicious code through their SDKs

- Access literally any file and database on your Mac, including iMessage conversations, emails and source code

- Record the user’s screen without them knowing

- Install a new root SSL certificate, allowing the attacker to intercept most of your encrypted network requests

To prove that this works, I looked into injecting malicious code into a shell script that developers run locally, in this case BuddyBuild’s:

- Same requirements as in the previous example: the attacker needs to be in the same network

- BuddyBuild’s docs told users to `curl` an unencrypted URL and pipe the content over to `sh`, meaning any code the `curl` command returns will be executed

- The modified `UpdateSDK` script is provided by the attacker (Raspberry Pi), and asks for the admin password (normally BuddyBuild’s update script doesn’t ask for this)

- In under a second, the malicious script does the following:

  - Enable SSH remote access for the current account

  - Install & set up a keylogger that auto-starts when you log in

Once the attacker has the root password and SSH access, they can do anything listed above.

BuddyBuild resolved the issue after I reported it.
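Piping `curl` into `sh` runs whatever bytes arrive. A safer pattern is to download first, check, and only then run. Here is a minimal Python sketch of the check step, assuming the provider publishes a SHA-256 for its script over a channel you already trust; the `checked_script` name and the example.com URL are hypothetical:

```python
import hashlib
from urllib.parse import urlparse


def checked_script(url: str, body: bytes, expected_sha256: str) -> bytes:
    """Return `body` only if it was fetched over HTTPS and matches a pinned SHA-256.

    Hypothetical helper: `expected_sha256` has to come from a source you
    already trust, never from the same response as the script itself.
    """
    scheme = urlparse(url).scheme
    if scheme != "https":
        raise ValueError(f"refusing a script fetched over {scheme}: {url}")
    actual = hashlib.sha256(body).hexdigest()
    if actual != expected_sha256.lower():
        raise ValueError(f"hash mismatch for {url}: got {actual}")
    return body
```

Only after `checked_script` returns would you write the body to disk and execute it. A tampered `UpdateSDK` fails the hash check, and a downgraded URL is rejected before the hash is even computed.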

How realistic is such an attack?

Very! Open your Network settings on the Mac, and take a look at the list of WiFi networks your Mac has connected to. In my case, my MacBook had connected to over 200 hotspots. How many of them can you fully trust? Even in a trustworthy network, there could be other machines that were previously compromised and are now carrying out remote-controlled attacks (see section above).

SDKs and developer tools are increasingly becoming targets for attackers. Some examples from the past few years:

- Xcode Ghost affected about 4,000 iOS apps, including WeChat:

  - Attacker gains remote access to any phone running the app

  - Show phishing popups

  - Access and modify the clipboard (dangerous when using password managers)

- The NSA worked on finding iOS exploits

- Pegasus: malware for non-jailbroken iPhones, used by governments

- KeyRaider: only affected jailbroken iPhones, but still stole user credentials from over 200,000 end users

- Just in the last few weeks, there have been multiple posts about how this affects web projects as well (e.g. 1, 2)

And many, many more. Another approach is gaining access to the download server (e.g. an S3 bucket, using its access keys) and replacing the binary. This has happened multiple times in the past few years, for example in the Transmission Mac app incident. This opens up a whole new area of attack, which I don’t cover in this blog post.

Conferences, hotels, coffee shops

Every time you connect to the WiFi at a conference, hotel or coffee shop, you become an easy target. Attackers know that conferences attract a high number of developers, and can easily take advantage of the situation.

How can SDK providers protect their users?

A full answer is out of scope for this blog post, but Mozilla offers a security guide that’s a good starting point, as well as a tool called Observatory that runs automatic checks on server settings and certificates.
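One concrete measure in this area is HTTP Strict Transport Security (HSTS): a `Strict-Transport-Security` response header tells browsers to only ever reach the domain over HTTPS, which defeats SSL stripping on later visits (and on the first one if the domain is preloaded). Observatory checks for it among other things. A minimal Python sketch that parses the header value; `parse_hsts` is a hypothetical helper, not part of any Mozilla tool:

```python
def parse_hsts(header: str) -> dict:
    """Parse a Strict-Transport-Security header value into its directives.

    max-age and includeSubDomains come from RFC 6797; preload is a widely
    used extension for browser preload lists.
    """
    result = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in header.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        if name == "max-age":
            result["max_age"] = int(value.strip().strip('"'))  # value may be quoted
        elif name == "includesubdomains":
            result["include_subdomains"] = True
        elif name == "preload":
            result["preload"] = True
    return result
```

For example, `max-age=31536000; includeSubDomains; preload` asks browsers to stick to HTTPS for one year, for all subdomains, and signals consent to preloading.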

How many of the most popular SDKs are affected by this vulnerability?

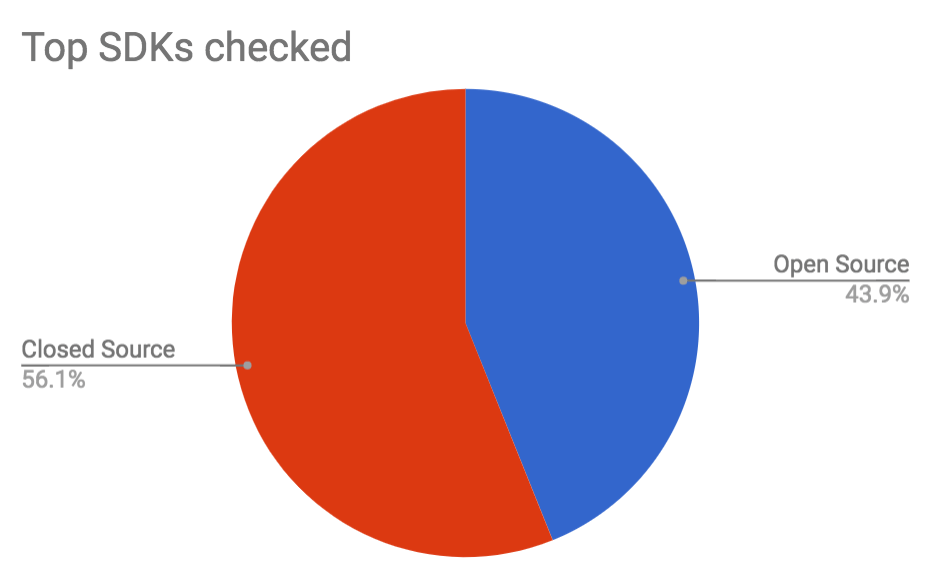

For this research, starting on 23 November 2017, I investigated 41 of the most popular mobile SDKs according to AppSight (counting all Facebook and Google SDKs as one, as they share the same installation method, and skipping SDKs that are open source on GitHub).

- 41 SDKs checked

  - 23 are closed source and you can only download binary files

  - 18 are open source (all of them on GitHub)

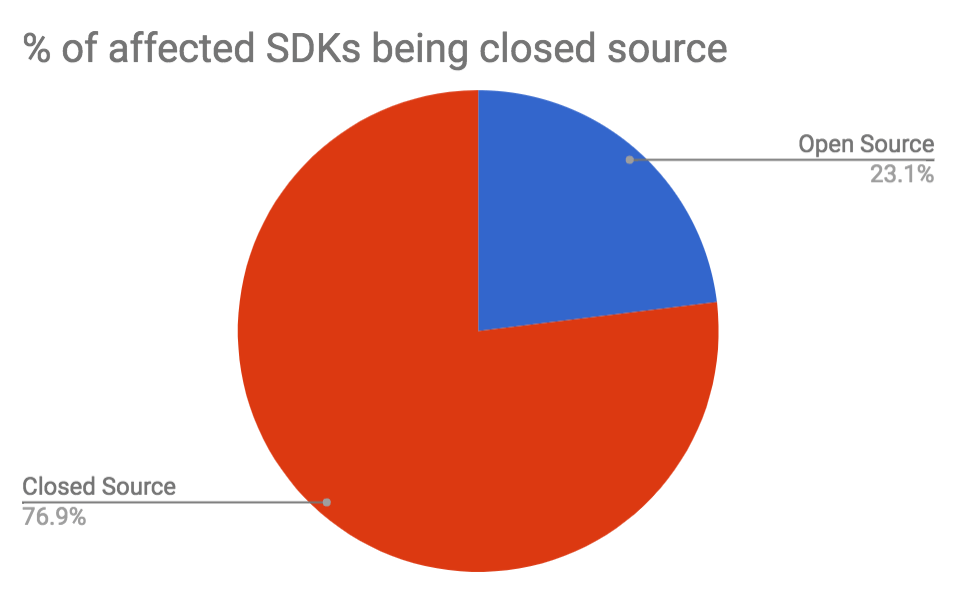

- 13 are an easy target of person-in-the-middle attacks without any indication to the user

  - 10 of them are closed source SDKs

  - 3 of them are open source SDKs, meaning the user can either download the SDK via unencrypted HTTP from the official website, or securely clone the source code from GitHub

- 5 of the 41 SDKs offer no way to download the SDK securely, meaning they neither support HTTPS nor use a service that does (e.g. GitHub)

- Roughly a third (13 of 41) of the top used SDKs are easy targets for this attack

- 5 additional SDKs required an account to download the SDK (do they have something to hide?)
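The percentages follow directly from the counts above. A quick Python check, using only the numbers from the list; the per-group rates are derived here, not separately measured:

```python
# Counts taken from the breakdown above
checked = 41
closed_source, open_source = 23, 18
affected_closed, affected_open = 10, 3
affected = affected_closed + affected_open

assert closed_source + open_source == checked

print(affected)                                                  # 13
print(f"{affected / checked:.1%}")                               # 31.7%
print(f"closed source: {affected_closed / closed_source:.0%}")   # closed source: 43%
print(f"open source: {affected_open / open_source:.0%}")         # open source: 17%
```

Per group, roughly 43% of the closed source SDKs were affected, versus about 17% of the open source ones.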

I notified all affected providers in November/December 2017, giving them 2 months to resolve the issue before talking about it publicly. Out of the 13 affected SDKs:

- 1 resolved the issue within three business days

- 5 resolved the issue within a month

- 7 SDKs are still vulnerable to this attack at the time of publishing this post.

The SDK providers that are still affected either haven’t responded to my emails or just replied with “We’re gonna look into this”. All of them are in the top 50 most-used SDKs.

Looking through the available CocoaPods, a total of 4,800 releases from 623 CocoaPods are affected. I generated this data locally from the Specs repo with the command `grep -l -r '"http": "http://' *`.
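The `grep` above matches raw text. A slightly more precise variant parses each JSON podspec and only looks at the `http` key of its `source` entry. This is a minimal Python sketch, assuming the Specs repo layout `<Pod>/<version>/<Pod>.podspec.json`; `find_http_sources` is a hypothetical helper, and its counts may differ somewhat from the grep-based figures:

```python
import json
from pathlib import Path


def find_http_sources(specs_root: str) -> tuple[int, int]:
    """Count releases (and distinct pods) whose source is a plain http:// download."""
    releases = 0
    pods = set()
    for path in Path(specs_root).rglob("*.podspec.json"):
        try:
            spec = json.loads(path.read_text(encoding="utf-8"))
        except (OSError, ValueError):
            continue  # skip unreadable or malformed specs
        if not isinstance(spec, dict):
            continue
        source = spec.get("source", {})
        if isinstance(source, dict) and str(source.get("http", "")).startswith("http://"):
            releases += 1
            # Fall back to the <Pod> directory name if the spec lacks a name
            pods.add(spec.get("name") or path.parent.parent.name)
    return releases, len(pods)
```

Pods fetched via `git` from GitHub are ignored here, since their `source` has no `http` entry.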

Open Source vs Closed Source

Looking at the numbers above, you are much more likely to be affected by attacks if you use closed source SDKs. More importantly, when an SDK is closed source, it’s much harder for you to verify the integrity of the dependency. As you probably know, you should always check the Pods directory into version control, to detect changes and be able to audit your dependency updates. 100% of the open source SDKs I investigated can be used directly from GitHub, meaning even the 3 affected open source SDKs are safe as long as you take them from GitHub instead of the provider’s website.
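If the Pods directory is checked in, `git diff` already shows every changed file after an update. For a vendored closed source SDK, you can still at least detect that something changed, even if you can’t read what changed. A minimal Python sketch, with hypothetical helper names, that fingerprints a directory and compares two snapshots:

```python
import hashlib
from pathlib import Path


def tree_manifest(root: str) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    base = Path(root)
    return {
        p.relative_to(base).as_posix(): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(base.rglob("*"))
        if p.is_file()
    }


def diff_manifests(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed, or changed between two manifests."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }
```

Taking a manifest before and after an SDK update and reviewing the diff is a cheap audit step; an unexpected new file in a binary-only SDK is worth asking the vendor about.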

Based on the numbers above, it is clear that in addition to not being able to dive into the source code of closed source SDKs, you also face a much higher risk of being attacked, and not only through person-in-the-middle attacks, but also when:

- The attacker gains access to the SDK download server

- The company providing the SDK gets compromised

- The local government forces the company to include back-doors

- The company providing the SDK is evil and includes code & tracking you don’t want

You are responsible for the binaries you ship! You have to make sure you don’t break your users’ trust, violate European Union data protection laws (GDPR), or let a malicious SDK steal your users’ credentials.

Wrapping up

As developers, it’s our responsibility to make sure we only ship code we trust. One of the easiest attack vectors right now is via malicious SDKs. If an SDK is open source, hosted on GitHub, and installed via CocoaPods, you’re pretty safe. Be extra careful with bundling closed-source binaries or SDKs you don’t fully trust.

Since this type of attack can be carried out with little trace, it won’t be easy to find out whether your codebase is affected. By using open source code, we as developers can better protect ourselves, and with that, our customers.

Check out my other privacy and security related publications.

Update: Crowdsourced list of SDKs

Many people asked for a list of affected SDKs. Instead of publishing a static list, I decided to set up a repo where every developer can add and update SDKs; check it out at trusting-sdks/https.

Thank you

Special thanks to Manu Wallner for doing the voice recordings for the video.

Special thanks to my friends for providing feedback on this post: Jasdev Singh, Dave Schukin, Manu Wallner, Dominik Weber, Gilad, Nicolas Haunold and Neel Rao.

Tags: security, privacy, sdks